Introduction

Simple IoT enables you to add remote sensor data, telemetry, configuration, and device management to your project or product.

Implementing IoT systems is hard. Most projects take way longer and cost more than they should. The fundamental problem is getting data from remote locations (edge) to a place where users can access it (cloud). We also need to update data and configuration at the edge in real time from any location. Simple IoT is an attempt to solve these problems by embracing the fact that IoT systems are inherently distributed and building on simple concepts that scale.

Simple IoT provides:

- A single application with no dependencies that can be run in both cloud and edge instances

- efficient synchronization of data in both directions

- A flexible UI to view configuration and current values

- A rules engine that runs on all instances that can trigger notifications or set data

- Extensive support for Modbus - both server and client

- Support for the Linux 1-wire subsystem.

- flexible graph organization of instances, users, groups, rules, and configuration

- Integration with other services like InfluxDB, Victoria Metrics, and Twilio

- A system that is easy to extend in any language using NATS

- A number of useful Go packages to use in your custom application

See vision, architecture, and integration for addition discussion on these points.

See detailed documentation for installation, usage, and development information.

Motivation

This project was developed while building real-world IoT applications and has been driven by the following requirements:

- Data (state or configuration) can be changed anywhere — at edge devices or in the cloud and this data needs to be synchronized seamlessly between instances. Sensors, users, rules, etc. can all change data. Some edge systems have a local display where users can modify the configuration locally as well as in the cloud. Rules can also run in the cloud or on edge devices and modify data.

- Data bandwidth is limited in some IoT systems — especially those connected with Cat-M modems (< 100Kb/sec). Additionally, connectivity is not always reliable, and systems need to continue operating if not connected.

Core ideas

The process of developing Simple IoT has been a path of reducing what started as a fairly complex IoT system to simpler ideas. This is what we discovered along the way:

- Treat configuration and state data the same for purposes of storage and synchronization.

- Represent this data using simple types (Nodes and Points).

- Organize this data in a graph.

- All data flows through a message bus.

- Run the same application in the cloud and at the edge.

- Automatically sync common data between instances.

Design is the beauty of turning constraints into advantages.

- Ava Raskin

These constraints have resulted in Simple IoT becoming a flexible distributed graph database optimized for IoT datasets. We’ll explore these ideas more in the documentation.

Support, Community, Contributing, etc.

Pull requests are welcome - see development for more thoughts on architecture, tooling, etc. Issues are labeled with “help wanted” and “good first issue” if you would like to contribute to this project.

For support or to discuss this project, use one of the following options:

- Documentation

- Simple IoT community forum

- #simpleiot Slack channel is available on gophers.slack.com

- Open a GitHub issue

- Simple IoT YouTube channel

- Subscribe to our email newsletter for project updates.

If you use this project, please let us know! It is really helpful to hear from users.

License

Apache Version 2.0

Contributors

Thanks to contributors:

Made with contrib.rocks.

Installation

Simple IoT will run on the following systems:

- ARM/x86/RISC-V Linux

- MacOS

- Windows

The computer you are currently using is a good platform to start with as well as any common embedded Linux platform like the Raspberry PI.

If you needed an industrial class device, consider something from embeddedTS

like the TS-7553-V2.

The Simple IoT application is a self contained binary with no dependencies. Download the latest release for your platform and run the executable. Once running, you can log into the user interface by opening http://localhost:8118 in a browser. The default login is:

- user:

admin - pass:

admin

Simple IoT self-install (Linux only)

Simple IoT self-installation does the following:

- creates a Systemd service file

- creates a data directory

- starts and enables the service

To install as user, copy the siot binary to some location like

/usr/local/bin and then run:

siot install

To install as root:

sudo siot install

The default ports are used, so if you want something different, modify the

generated siot.service file.

Cloud/Server deployments

When on the public Internet, Simple IoT should be proxied by a web server like Caddy to provide TLS/HTTPS security. Caddy by default obtains free TLS certificates from Let’s Encrypt and ZeroSSL with automatic fallback if one provider fails.

There are Ansible recipes available to deploy Simple IoT, Caddy, InfluxDB, and Grafana that work on most Linux servers.

Video: Setting up a Simple IoT System in the cloud

Yocto Linux

Yocto Linux is a popular edge Linux solution. There is a BitBake recipe for including Simple IoT in Yocto builds.

Networking

By default, Simple IoT runs an embedded NATS server and the SIOT NATS client is

configured to connect to nats://127.0.0.1:4222.

Use Cases

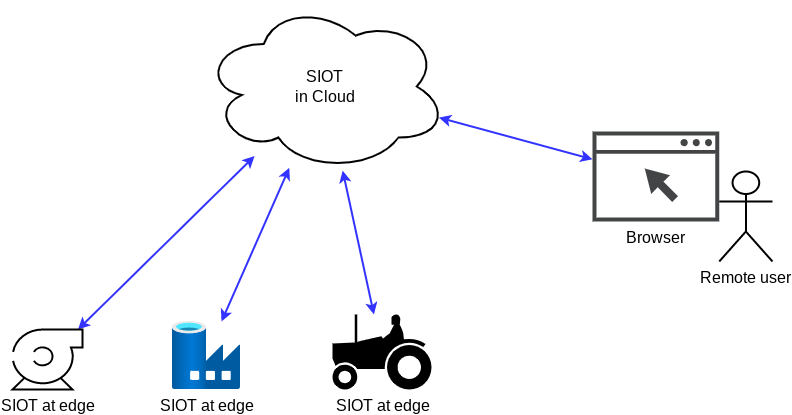

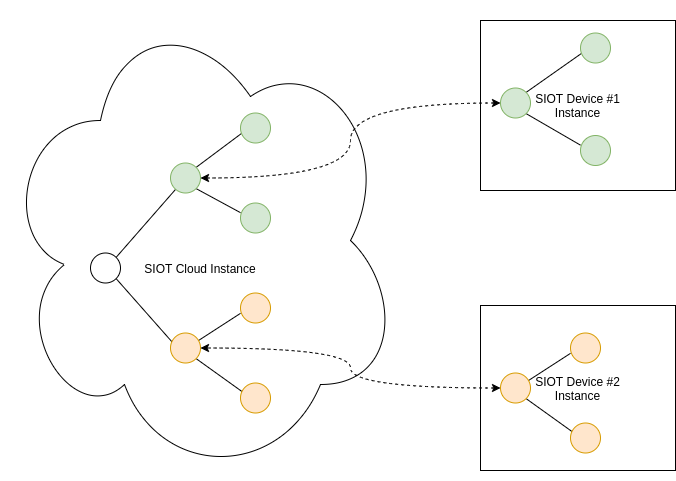

Simple IoT is platform that can be used to build IoT systems where you want to synchronize data between a number of distributed devices to a common central point (typically in the cloud). A common use case is connected devices where users want to remotely monitor and control these devices.

Some examples systems include:

- Irrigation monitoring

- Alarm/building control

- Industrial vehicle monitoring (commercial mowers, agricultural equipment, etc.)

- Factory automation

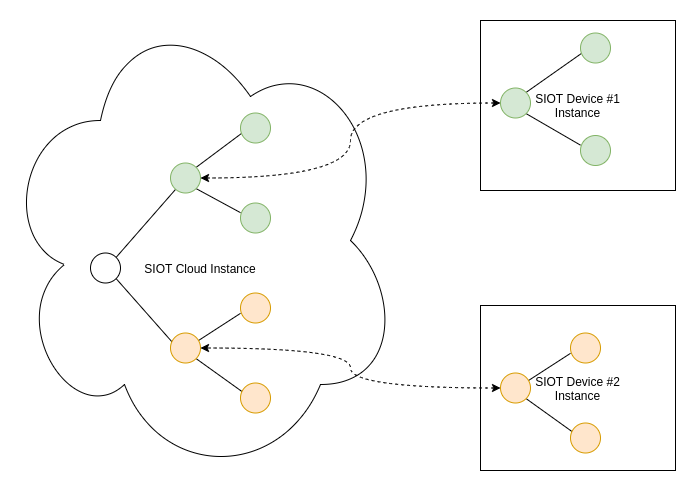

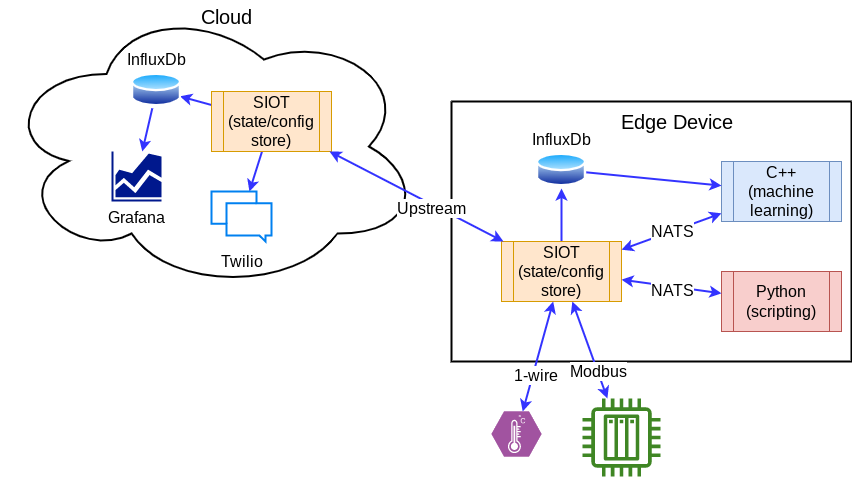

SIOT is optimized for systems where you run Embedded Linux at the edge and have fairly complex config/state that needs synchronized between the edge and the cloud.

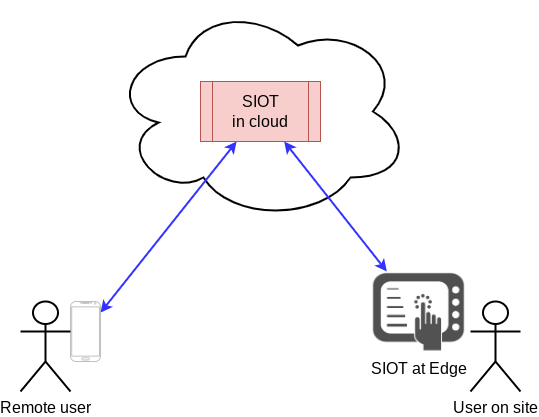

Changes can be made anywhere

Changes to config/state can be made locally or remotely in a SIOT system.

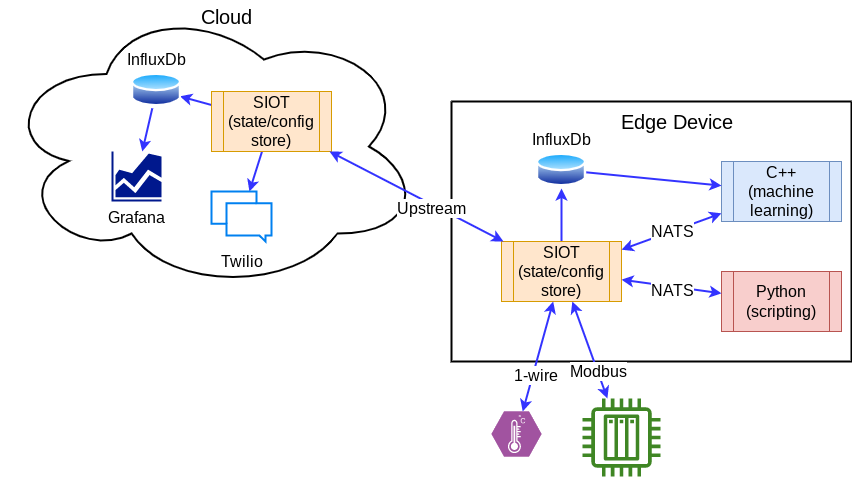

Integration

There are many ways to integrate Simple IoT with other applications.

There are cases where some tasks like machine learning are easier to do in languages like C++, then you can connect these applications to SIOT via NATS to access config/state. See the Integration reference guide for more detailed information.

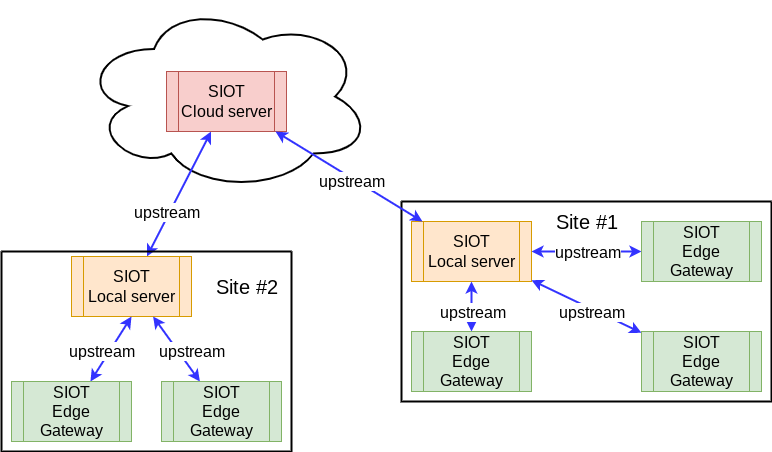

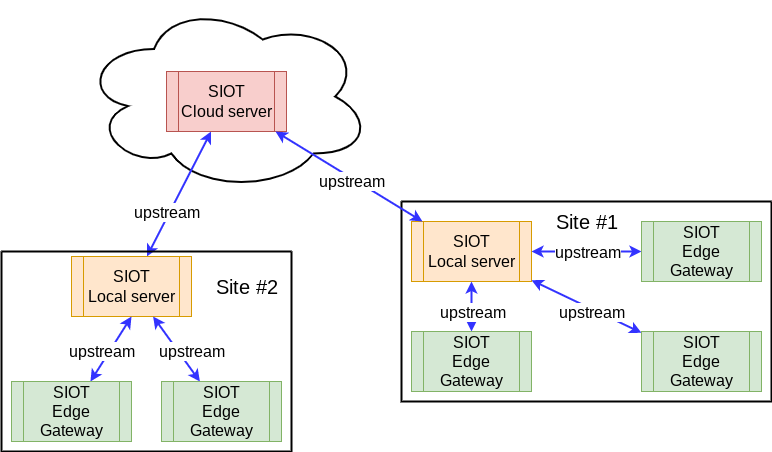

Multiple upstreams

Because we run the same SIOT application everywhere, we can add upstream instances at multiple levels.

This flexibility allows us to run rules and other logic at any level (cloud, local server, or edge gateway) - wherever it makes sense.

User Interface

Contents

Basic Navigation

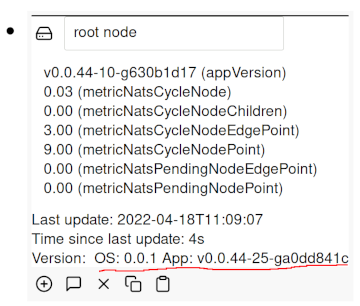

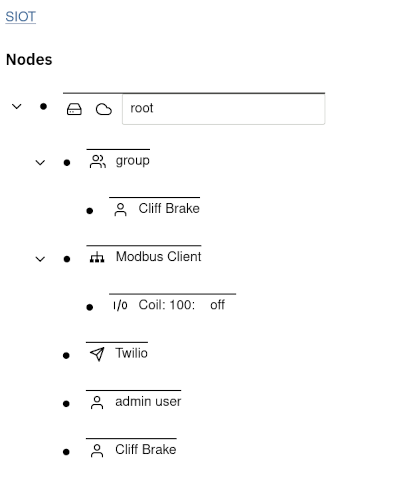

After Simple IoT is started, a web application is available on port :8118

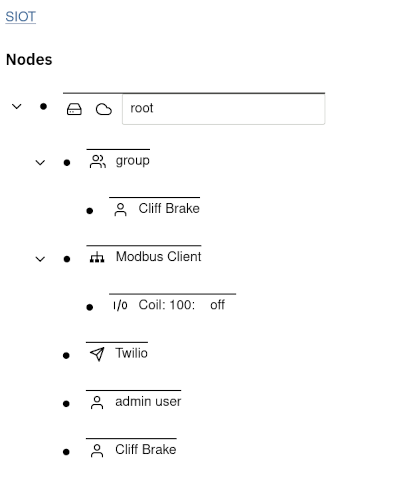

(typically http://localhost:8118). After logging in

(default user/pass is admin/admin), you will be presented with a tree of

nodes.

The Node is the base unit of configuration. Each node contains Points which

describe various attributes of a node. When you expand a node, the information

you see is a rendering of the point data in the node.

You can expand/collapse child nodes by clicking on the arrow

![]() to the left of a node.

to the left of a node.

You can expand/edit node details by clicking on the dot

![]() to the left of a node.

to the left of a node.

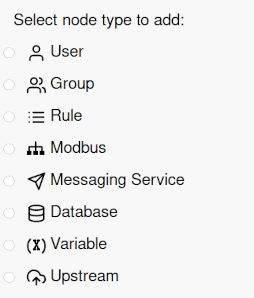

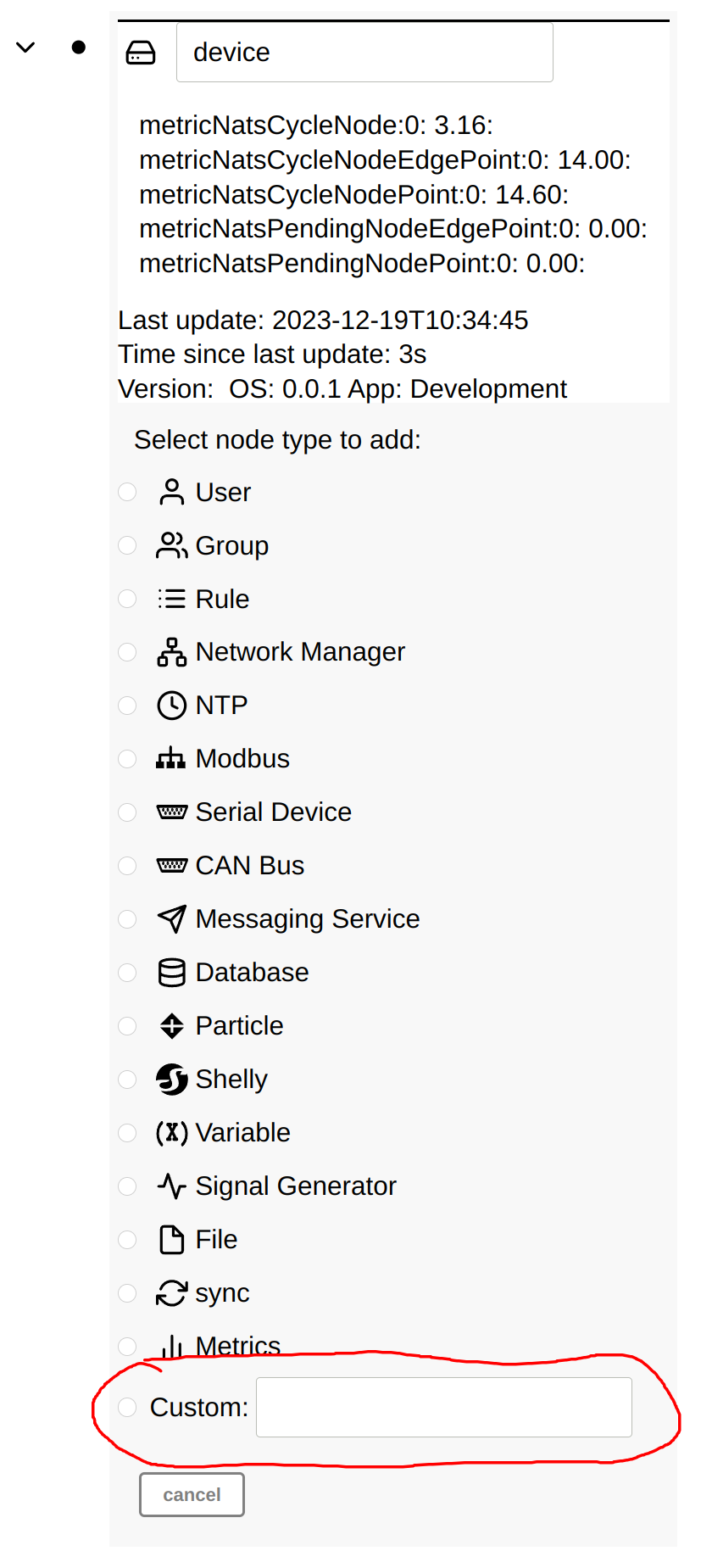

Adding nodes

Child nodes can be added to a node by clicking on the dot to expand the node, then clicking on the plus icon. A list of available nodes to add will then be displayed:

Some nodes are populated automatically if a new device is discovered, or a downstream device starts sending data.

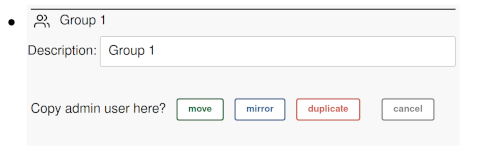

Deleting, Moving, Mirroring, and Duplicating nodes

Simple IoT provides the ability to re-arrange and organize your node structure.

To delete a node, expand it, and then press the delete

![]() icon.

icon.

To move or copy a node, expand it and press the copy

![]() icon. Then expand the destination node and

press the paste

icon. Then expand the destination node and

press the paste ![]() icon. You will then be

presented with the following options:

icon. You will then be

presented with the following options:

move- moves a node to new locationmirror- is useful if you want a user or device to be a member of multiple groups. If you change a node, all the mirror copies of the node update as well.duplicate- recursively duplicates the copied node plus all its descendants. This is useful for scenarios where you have a device or site configuration (perhaps a complex Modbus setup) that you want to duplicate at a new site.

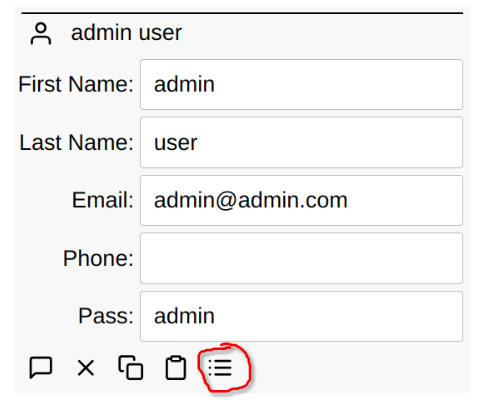

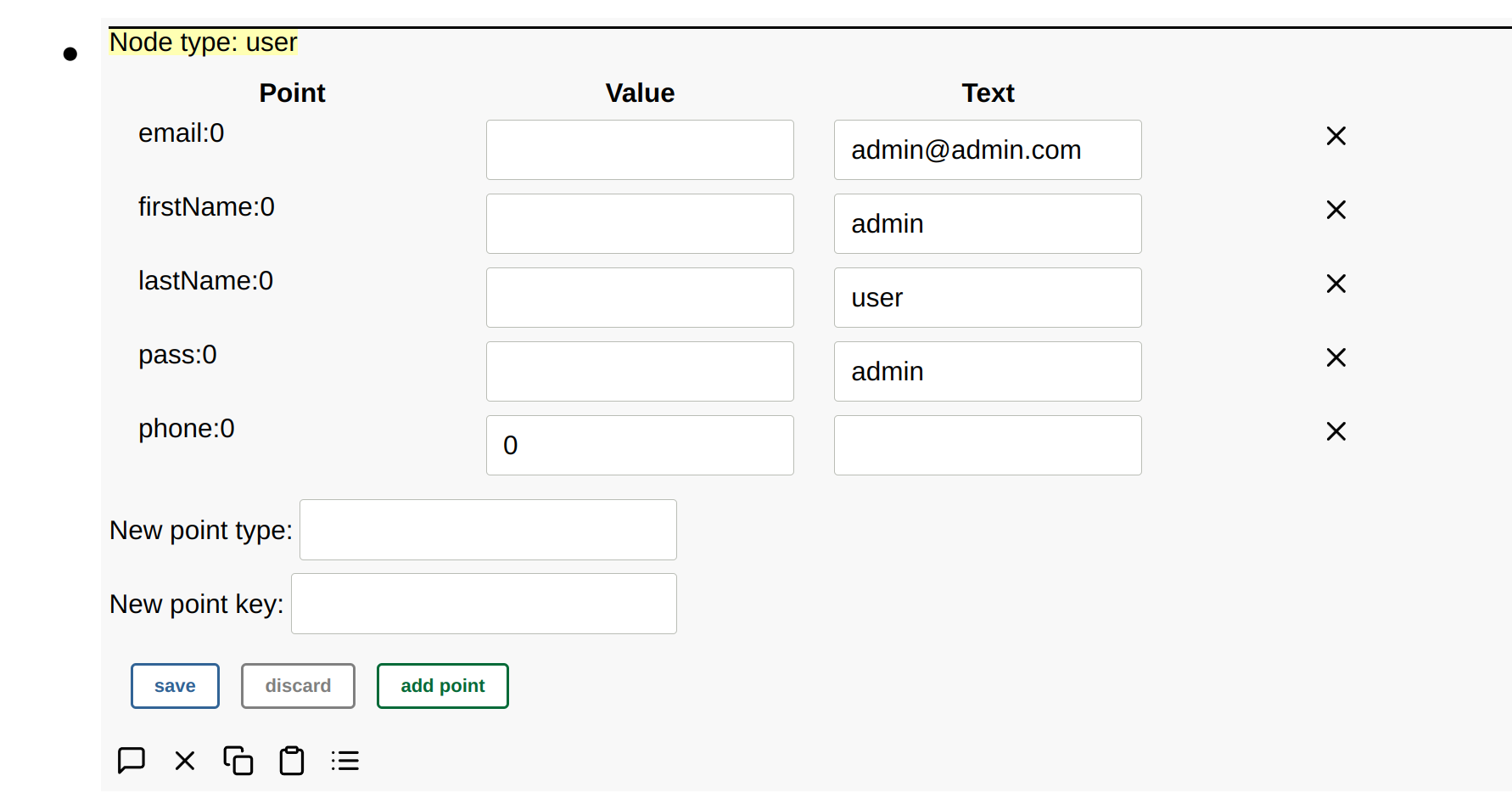

Raw Node View

If a node is expanded, a raw node button is available that allows you to view the raw type and points for any node in the tree. It is useful at times during development and debugging to be able to view the raw points for a node.

After the raw button is pressed, the type and points are displayed:

Unknown nodes will also be displayed as raw nodes.

Points can also be edited, added, or removed in raw mode.

A custom node type can also be added by specifying the node type when adding a node. This can be useful when developing new clients or external clients that run outside of the SImple IoT application.

Graphing and advanced dashboards

If you need graphs and more advanced dashboards, consider coupling Simple IoT with Grafana. Someday we hope to have dashboard capabilities built in.

Custom UIs

See the frontend reference documentation.

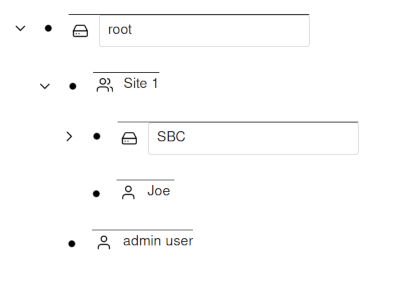

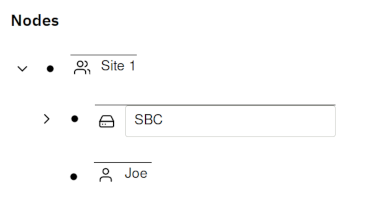

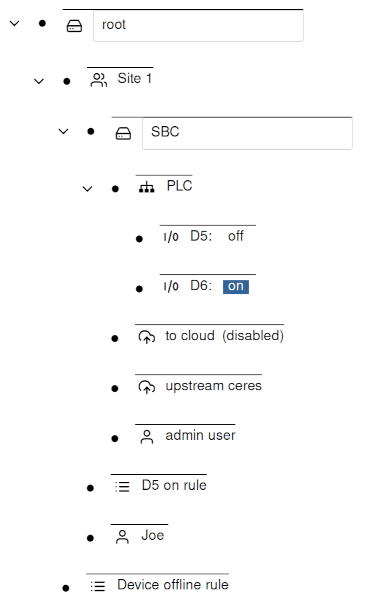

Users/Groups

Users and Groups can be configured at any place in the node tree. The way

permissions work is users have access to the parent node and the parent nodes

children. In the below example, Joe has access to the SBC device because

both Joe and SBC are members of the Site 1 group. Joe does have access

to the root node.

If Joe logs in, the following view will be presented:

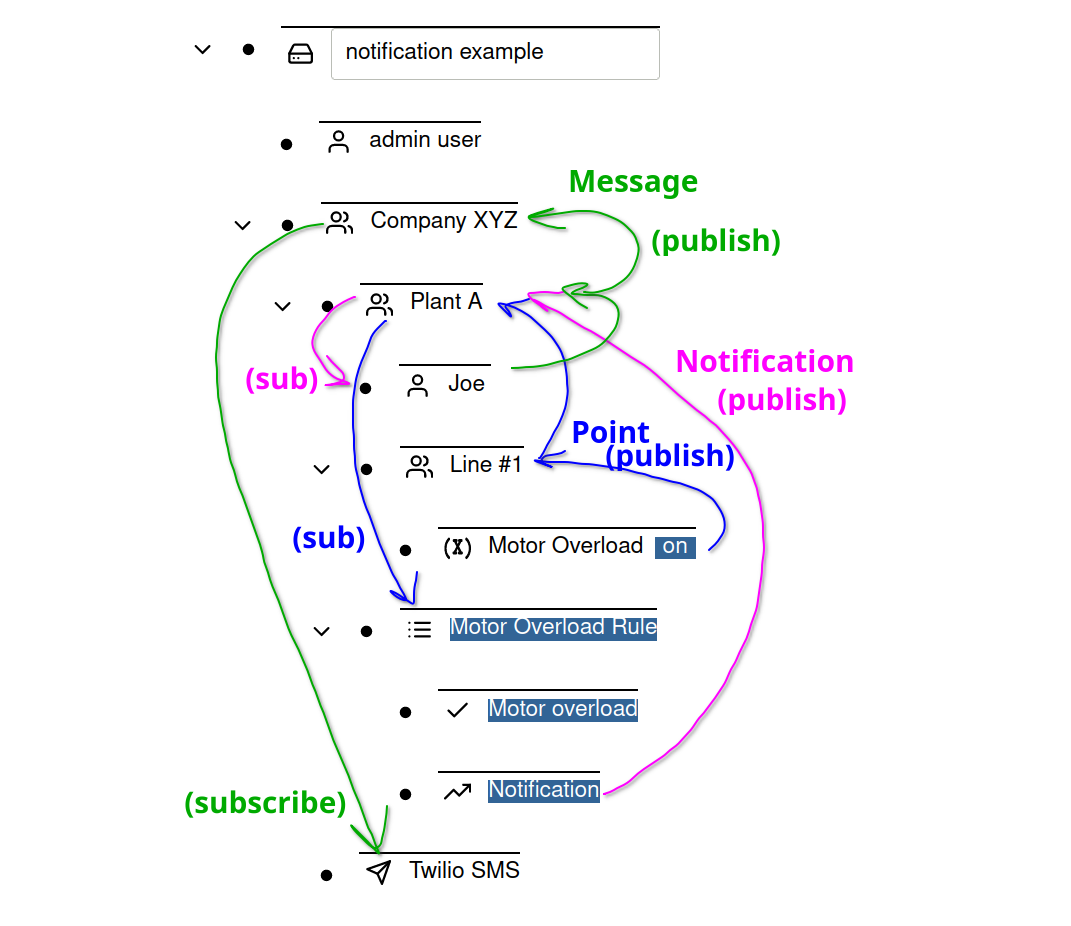

Notifications

Notifications are sent to users when a rule goes from inactive to active and contains a notification action. This notification travels up the node graph. At each parent node, users potentially listen for notifications. If a user is found, then a message is generated. This message likewise travels up the node graph. At each parent node, messaging service nodes potentially listen for messages and then process the message. Each node in Simple IoT that generates information is not concerned with the recipient of the information or how the information is used. This decoupling is the essence of messaging based systems (we use NATS) and is very flexible and powerful. Because nodes can be aliased (mirrored) to different places, this gives us a lot of flexibility in how points are processed. The node tree also gives us a very visual view of how things are connected as well as an easy way to expand or narrow scope based on high in the hierarchy a node is placed.

Example

There is hierarchy of nodes in this example system:

- Company

XYZ- Twilio SMS

Plant A- Joe

- Motor overload Rule

Line #1- Motor Overload

Plant B

The node hierarchy is used to manage scope and permissions. The general rule is

that a node has access to (or applies to) its parent nodes, and all of its

parents dependents. So in this example, Joe has access to everything in Plant

A, and likewise gets any Plant A notifications. The Motor overload rule also

applies to anything in Plant A. This allows us to write one rule that could

apply to multiple lines. The Twilio SMS node processes any messages generated in

Company XYZ including those generated in Plant A, Line #1, Plant B, etc.

and can be considered a company wide resource.

The process for generating an SMS notification to a user is as follows:

Line #1contains aMotor Overloadsensor. When this value changes, a point (blue) gets sent to its parentLine #1and then toPlant A. Although it is not shown below, the point also gets sent to theCompany XYZand root nodes. Points always are rebroadcast on every parent node back to the root.Plant Acontains a rule (Motor Overload) that is then run on the point, which generates a notification (purple) that gets sent back up to its parent (Plant A).Plant Acontains a userJoeso a notification + user generates a message (green), which gets sent back upstream toPlant Aand then toCompany XYZ.Company XYZcontains a messaging service (Twilio SMS), so the message gets processed by this service an SMS message gets sent toJoe.

The Motor Overload sensor node only generates what it senses. The Motor Overload

rule listens for points in Plant A (its parent) and processes those points.

The Joe user node listens for points at the Plant A node (its parent) and

processes any points that are relevant. The Twilio SMS node listens for point

changes at the Company XYZ node and processes those points. Information only

travels upstream (or up the node hierarchy).

In this example, the admin user does not receive notifications from the Twilio SMS messaging service. The reason is that the Twilio SMS node only listens for messages on its parent node. It does not have visibility into messages sent to the root node. With the node hierarchy, we can easily partition who gets notified. Additional group layers can be added if needed. No explicit binding is required between any of the nodes - the location in the graph manages all that. The higher up you go, the more visibility and access a node has.

Clients

Simple IoT is a framework that allows for clients to be added to manage IO, run rules, process data, etc. See documentation for individual clients. If you would like to develop a custom client, see the client reference documentation.

CAN Bus Client

The CAN client allows loading a standard CAN database file, receiving CAN data, and translating the CAN data into points via the database.

Usage

The CAN client can be used as part of the SimpleIoT library or through the web UI. The first step in either case is to create a CAN database in .kbc format.

Create the CAN Database

Create a file in the folder with the Go code named “test.kcd” containing the following:

<NetworkDefinition xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xmlns="http://kayak.2codeornot2code.org/1.0" xsi:schemaLocation="Definition.xsd">

<Document name="Some Document Name">some text</Document>

<Bus name="sampledatabase">

<Message id="0x123" name="HelloWorld" length="8">

<Notes></Notes>

<Signal name="Hello" offset="0" length="8"/>

<Signal name="World" offset="8" length="8"/>

</Message>

<Message id="0x12345678" name="Food" length="8" format="extended">

<Notes></Notes>

<Signal name="State" offset="0" length="32"/>

<Signal name="Type" offset="32" length="32"/>

</Message>

</Bus>

</NetworkDefinition>

You can create any CAN database you want by crafting it in Kvaser’s free DBC

editor and then using the canmatrix tool to convert it to KCD format. Note

that canmatrix does not support all features of the DBC and KCD formats.

Next, setup the virtual SocketCan interface.

Setup Virtual CAN Interface

Run this in the command line. Reference

sudo modprobe vcan

sudo ip link add dev vcan0 type vcan

sudo ip link set up vcan0

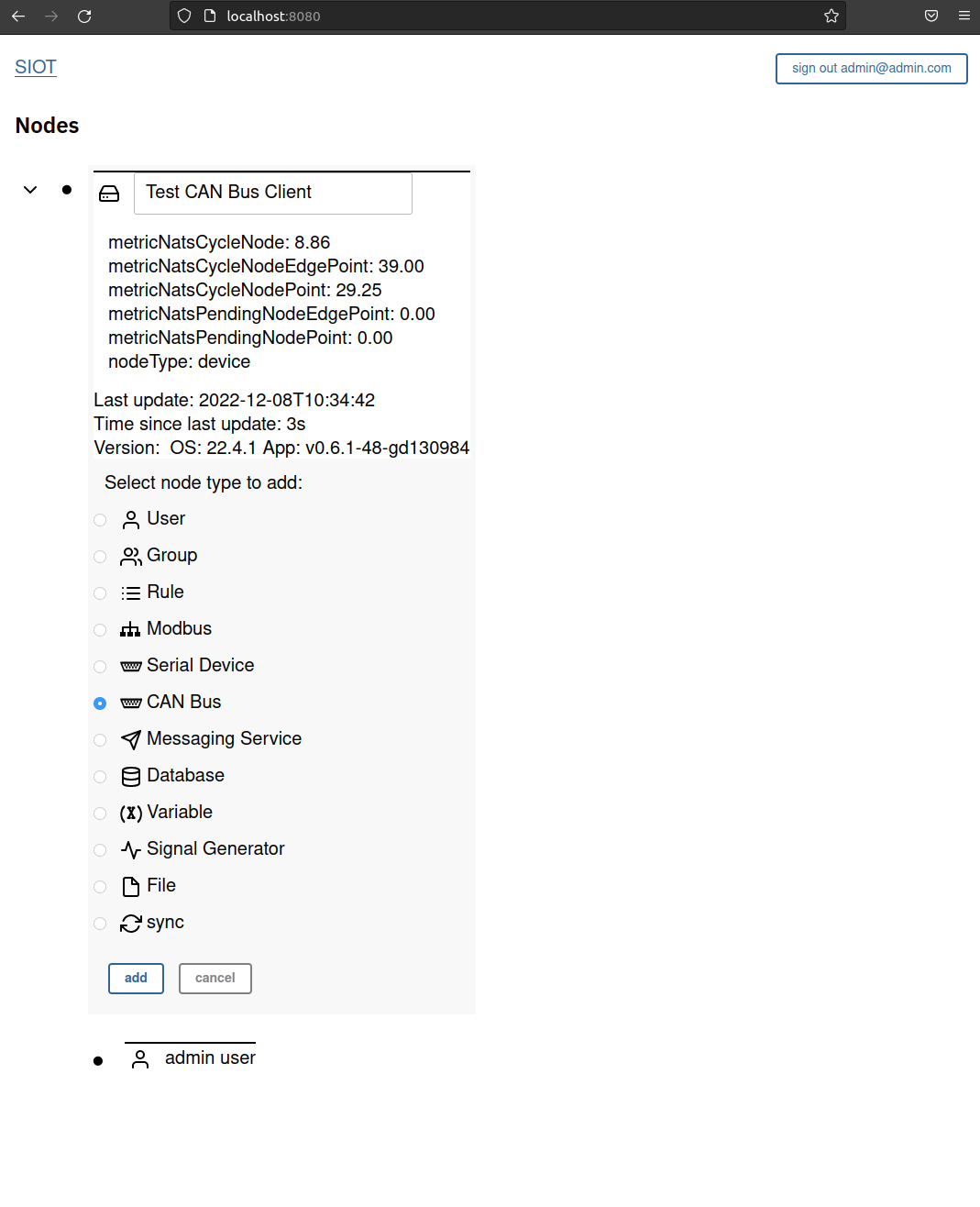

Option #1 - Use In Web UI

Follow the instructions to install SimpleIoT, run it, and navigate to the web UI.

Expand the root node and click the + symbol to add a sub node. Select “CAN Bus” and click “add”.

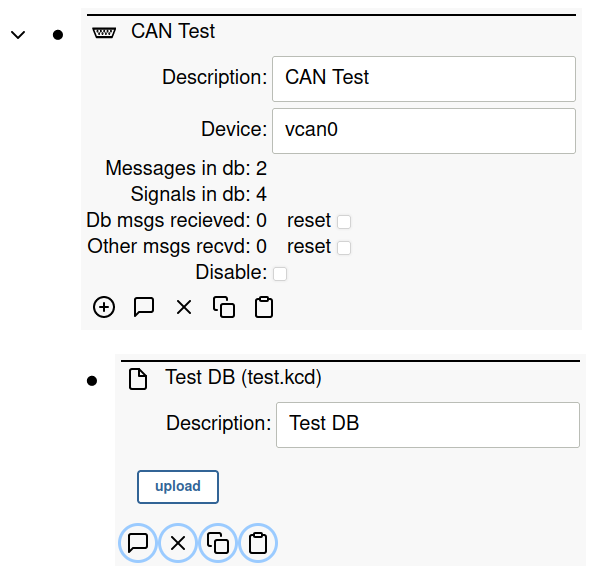

Configure the CAN Bus node with a File subnode and upload the .kcd

file you created.

Once the file has been uploaded, you should see the following stats in the CAN bus node:

Messages in db: 2 Signals in db: 4

Test with Messages

In a separate terminal:

cansend vcan0 123#R{8}

cansend vcan0 12345678#DEADBEEF

Ensure that there are no errors logged in the terminal by the application.

In the Web UI you should see the "Db msgs received" field increase to 2.

Option #2 - Use As Library

Copy this code to a Go file on your Linux machine in a folder by itself.

package main

import (

"log"

"github.com/nats-io/nats.go"

"github.com/simpleiot/simpleiot/client"

"github.com/simpleiot/simpleiot/data"

"github.com/simpleiot/simpleiot/server"

)

// exNode is decoded data from the client node

type exNode struct {

ID string `node:"id"`

Parent string `node:"parent"`

Description string `point:"description"`

Port int `point:"port"`

Role string `edgepoint:"role"`

}

// exNodeClient contains the logic for this client

type exNodeClient struct {

nc *nats.Conn

config client.SerialDev

stop chan struct{}

stopped chan struct{}

newPoints chan client.NewPoints

newEdgePoints chan client.NewPoints

chGetConfig chan chan client.SerialDev

}

// newExNodeClient is passed to the NewManager() function call -- when

// a new node is detected, the Manager will call this function to construct

// a new client.

func newExNodeClient(nc *nats.Conn, config client.SerialDev) client.Client {

return &exNodeClient{

nc: nc,

config: config,

stop: make(chan struct{}),

newPoints: make(chan client.NewPoints),

newEdgePoints: make(chan client.NewPoints),

}

}

// Start runs the main logic for this client and blocks until stopped

func (tnc *exNodeClient) Run() error {

for {

select {

case <-tnc.stop:

close(tnc.stopped)

return nil

case pts := <-tnc.newPoints:

err := data.MergePoints(pts.ID, pts.Points, &tnc.config)

if err != nil {

log.Println("error merging new points:", err)

}

log.Printf("New config: %+v\n", tnc.config)

case pts := <-tnc.newEdgePoints:

err := data.MergeEdgePoints(pts.ID, pts.Parent, pts.Points, &tnc.config)

if err != nil {

log.Println("error merging new points:", err)

}

case ch := <-tnc.chGetConfig:

ch <- tnc.config

}

}

}

// Stop sends a signal to the Run function to exit

func (tnc *exNodeClient) Stop(err error) {

close(tnc.stop)

}

// Points is called by the Manager when new points for this

// node are received.

func (tnc *exNodeClient) Points(id string, points []data.Point) {

tnc.newPoints <- client.NewPoints{id, "", points}

}

// EdgePoints is called by the Manager when new edge points for this

// node are received.

func (tnc *exNodeClient) EdgePoints(id, parent string, points []data.Point) {

tnc.newEdgePoints <- client.NewPoints{id, parent, points}

}

func main() {

nc, root, stop, err := server.TestServer()

if err != nil {

log.Println("Error starting test server:", err)

}

defer stop()

canBusTest := client.CanBus{

ID: "ID-canBus",

Parent: root.ID,

Description: "vcan0",

Device: "vcan0",

}

err = client.SendNodeType(nc, canBusTest, "test")

if err != nil {

log.Println("Error sending CAN node:", err)

}

// Create a new manager for nodes of type "testNode". The manager looks for new nodes under the

// root and if it finds any, it instantiates a new client, and sends point updates to it

m := client.NewManager(nc, newExNodeClient)

m.Start()

// Now any updates to the node will trigger Points/EdgePoints callbacks in the above client

}

Run the following commands:

go mod init example.com/mgo run <file>.go- Run the

go getcommands suggested bygo run go mod tidygo run <file>.go

Run it!

go run <file.go>

Follow instructions from the “Test with Messages” section above.

Future Work

- Scale and translate messages based on scale and offset parameters in database

- Auto connect to CAN bus in case it is brought up after SIOT client is started

- Attempt to bring up CAN bus within client, handle case where it is already up

- Support multiple CAN database files per node (be selective in which internal db is updated when a name or data point is received in the client)

- Support sending messages (concept of nodes and send/receive pulled from databases??)

- Support

.dbcfile format in addition to.kcd - Add the concept of a device to the CAN message points

File

The file node can be used to store files that are then used by other nodes/clients. Some examples include the CAN and Serial clients.

The default max payload of NATS is 1MB, so that is currently the file size limit, but NATS can be configured for a payload size up to 64MB. 8MB is recommended.

See the Frontend documentation for more information how the file UI is implemented.

If the Binary option is selected, the data is base64 encoded before it is

transmitted and stored.

Database Client

The main SIOT store is SQLite. SIOT supports additional database clients for purposes such as storing time-series data.

InfluxDB 2.x

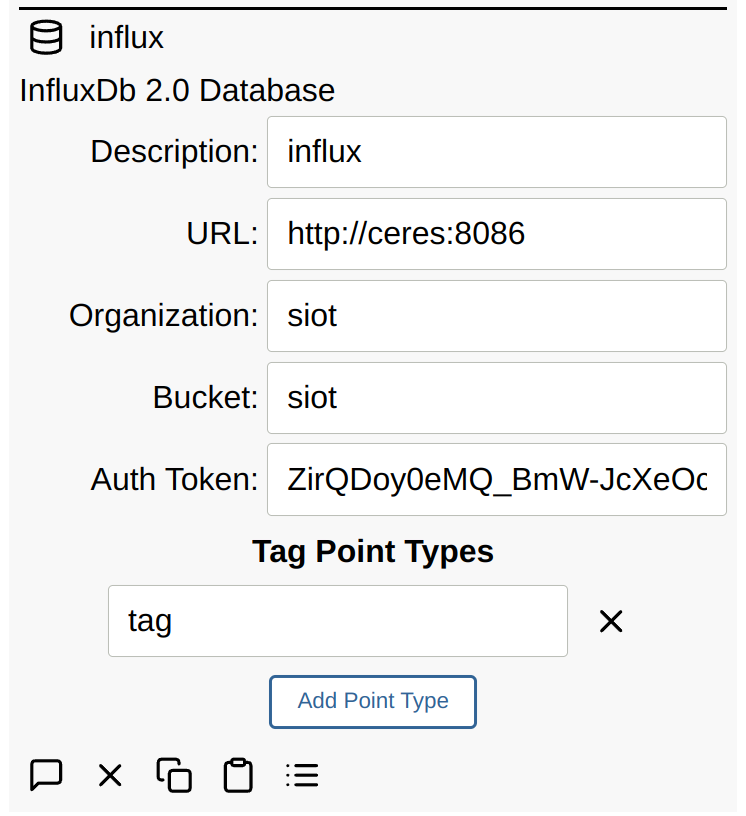

Point data can be stored in an InfluxDB 2.0 Database by adding a Database node:

The following InfluxDB tags are added to every point:

node.id(typically an UUID)node.type(extracted from the type field in the edge data structure)node.description(generated from thedescriptionpoint from the node)

Custom InfluxDB Tags

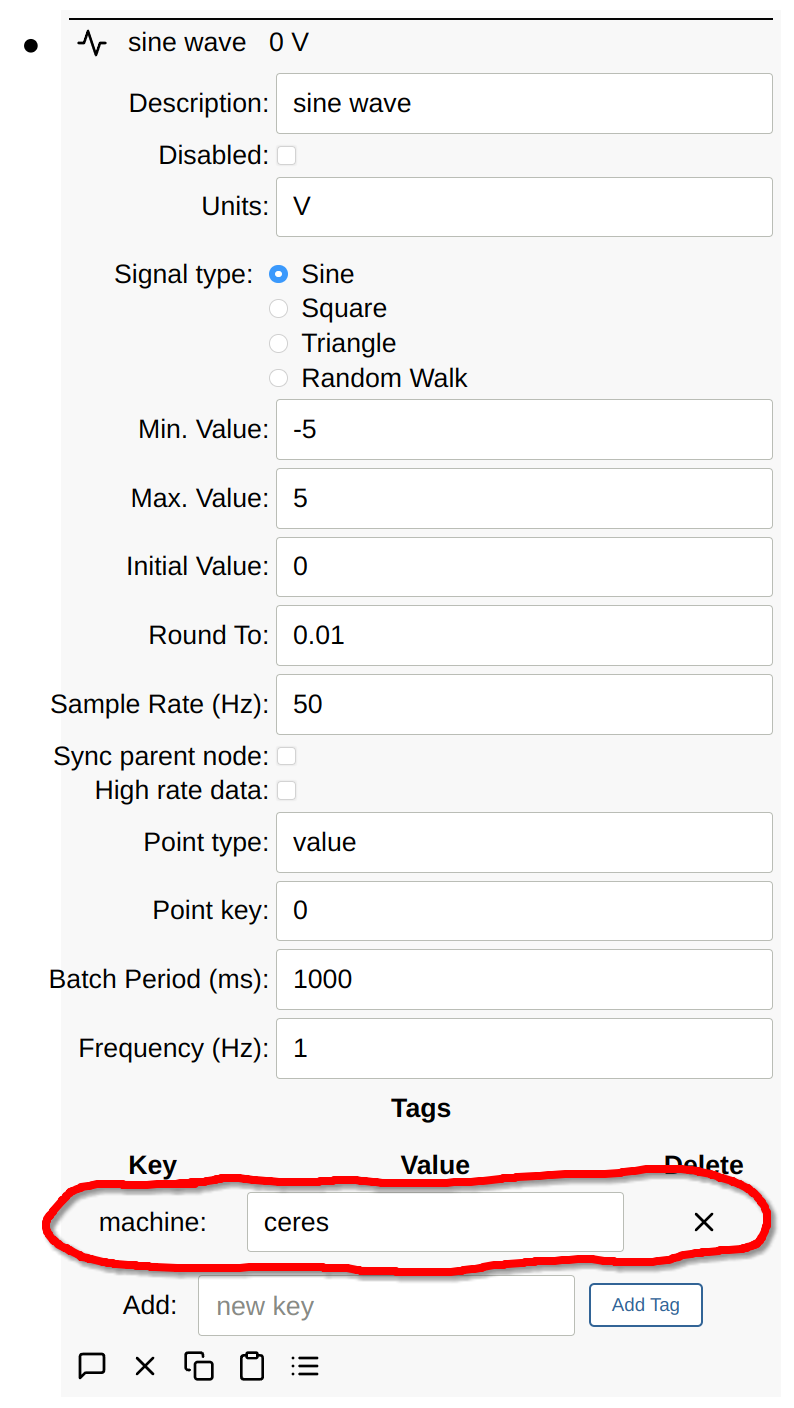

Additional tag tag points can be specified. The DB client will query and cache

node points of these types for any point flowing through the system and then

InfluxDB tags in the format: node.<point type>.<point key>. In the below

example, we added a machine tag to the signal generator node generating the

data.

When the tag field is specified in the database node, this machine tag is

now added to the Influx tags for every sample.

valueandtypeand fields from the pointnode.descriptionandnode.typeare automatically addednode.tag.machinegot added because thetagpoint was added to the list of node points that get added as tags.

See the Graphing documentation for information on how to automatically map tags to graph labels.

InfluxDB indexes tags, so generally there is not a huge cost to adding tags to samples as the long string is only stored once.

Victoria Metrics

Victoria Metrics supports the InfluxDB version 2 line protocol; therefore, it can be used for numerical data. Victoria Metrics does not support storing strings.

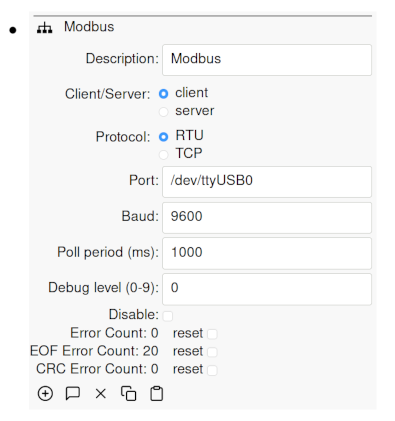

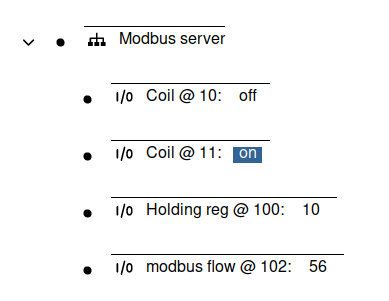

Modbus

Modbus is popular data communications protocol used for connecting industrial devices. The specification is open and available at the Modbus website. See also this Modbus Overview.

Simple IoT can function as both a Modbus client or server and supports both RTU and TCP transports. Modbus client/server is used as follows:

- Client: typically a PLC or Gateway - the device reading sensors and initiating Modbus transactions. This is the mode to use if you want to read sensor data and then process it or send to an upstream instance.

- Server: typically a sensor, actuator, or other device responding to Modbus requests. Functioning as a server allows SIOT to simulate Modbus devices or to provide data to another client device like a PLC.

Modbus is a prompt response protocol. With Modbus RTU (RS485), you can only have one client (gateway) on the bus and multiple servers (sensors). With Modbus TCP, you can have multiple clients and servers.

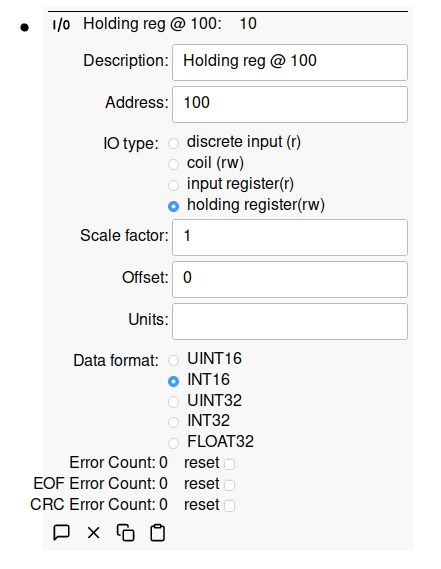

Modbus is configured by adding a Modbus node to the root node, and then adding IO nodes to the Modbus node.

The Response timeout parameter determines how long the Modbus client will wait

for a response from a device. The default is 100ms, which is adequate for most

devices, but it can be increased if you are communicating with a slow device.

Modbus IOs can be configured to support most common IO types and data formats:

Videos

Simple IoT Integration with PLC Using Modbus

Simple IoT upstream synchronization support

Simple IoT Modbus Demo

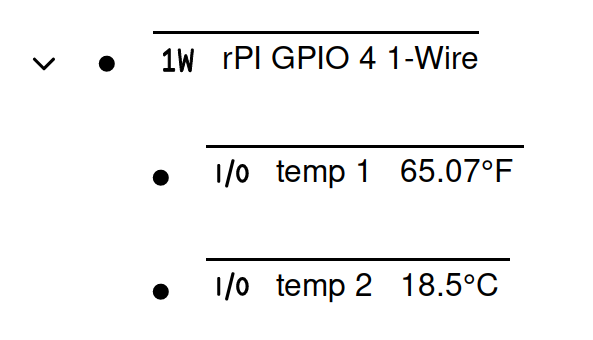

1-Wire

1-Wire is a device communication bus that provides low-speed data over a single conductor. It is also possible to power some devices over the data signal as well, but often a third wire is run for power.

Simple IoT supports 1-wire buses controlled by the

1-wire (w1) subsystem

in the Linux kernel. Simple IoT will automatically create nodes for 1-wire buses

and devices it discovers.

Bus Controllers

Raspberry PI GPIO

There are a number of bus controllers available but one of the simplest is a

GPIO on a Raspberry PI. To enable, add the following to the /boot/config.txt

file:

dtoverlay=w1-gpio

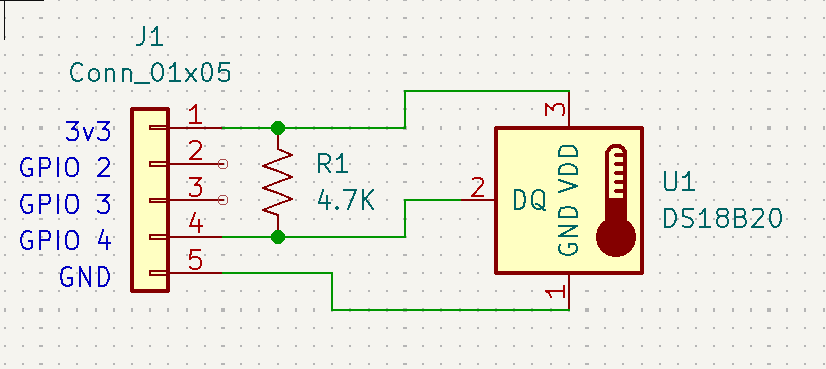

This enables a 1-wire bus on GPIO 4.

To add a bus to a different pin:

dtoverlay=w1-gpio,gpiopin=x

A 4.7kΩ pull-up resistor is needed between the 1-wire signal and 3.3V. This can be wired to a 0.1 inch connector as shown in the following schematic:

See this page for more information.

1-Wire devices

DS18B20 Temperature sensors

Simple IoT currently supports 1-wire temperature sensors such as the DS18B20.

This is a very popular and practical digital temperature sensor. Each sensor has

a unique address so you can address a number of them using a single 1-wire port.

These devices are readily available at low cost from a number of places

including eBay - search for DS18B20, and look for an image like the below:

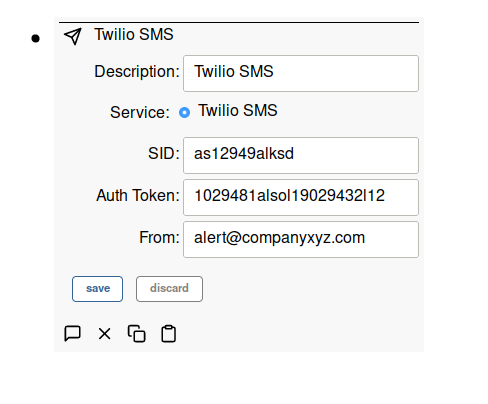

Messaging Services

SIOT can support multiple messaging services.

Twilio SMS Messaging

Simple IoT supports sending SMS messages using Twilio’s SMS service. Add a Messaging Service node and then configure.

Email Messaging

will be added soon …

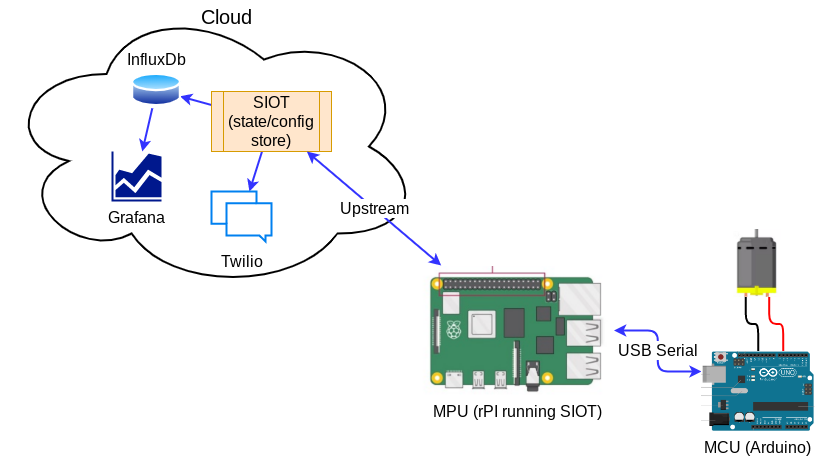

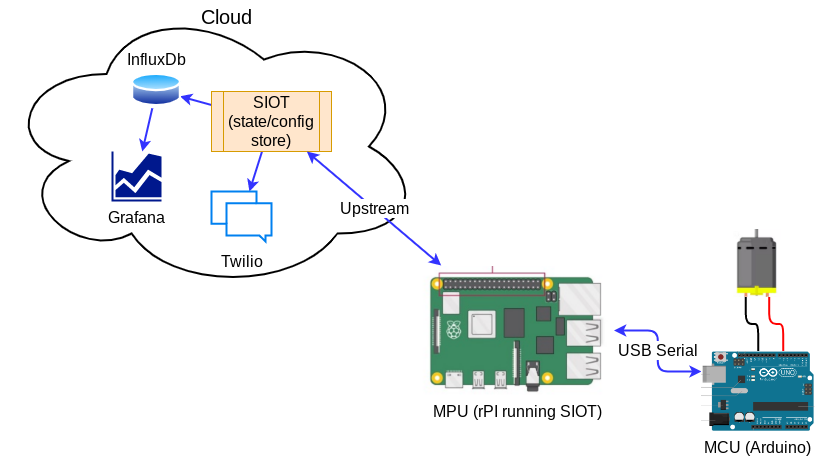

MCU Devices

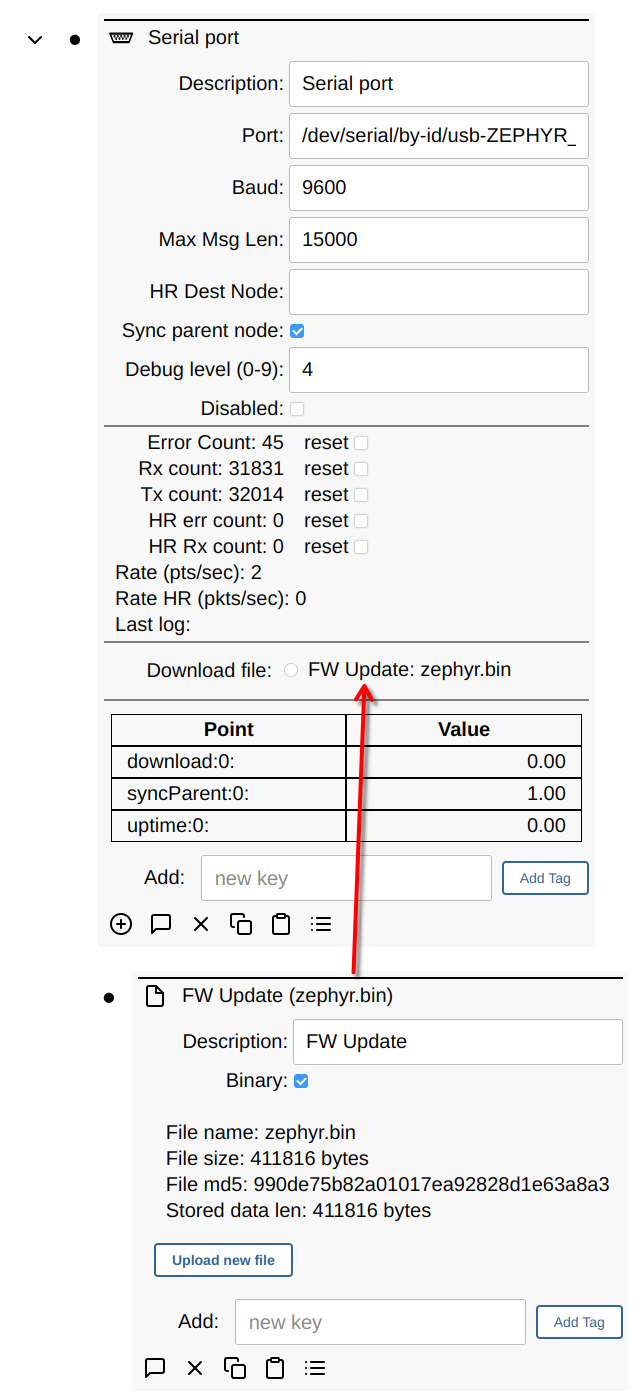

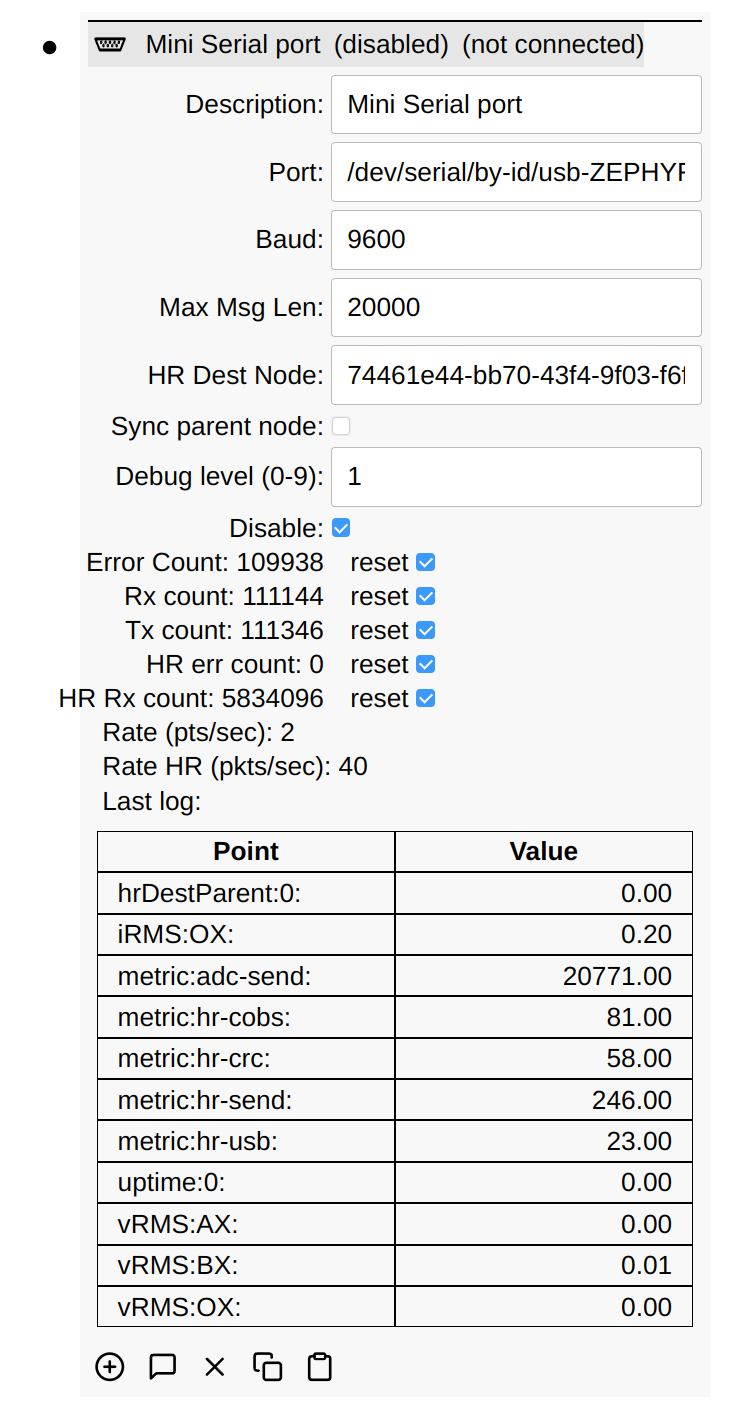

Microcontroller (MCU) devices can be connected to Simple IoT systems via various serial transports (RS232, RS485, CAN, and USB Serial). The Arduino platform is one example of an MCU platform that is easy to use and program. Simple IoT provides a serial interface module that can be used to interface with these systems. The combination of a laptop or a Raspberry PI makes a useful lab device for monitoring analog and digital signals. Data can be logged to InfluxDB and viewed in the InfluxDB Web UI or Grafana. This concept can be scaled into products where you might have a Linux MPU handling data/connectivity and a MCU doing real-time control.

See the Serial reference documentation for more technical details on this client.

File Download

Files (or larger chunks of data) can be downloaded to the MCU by adding a File node to the serial node. Any child File node will then show up as a download option.

Debug Levels

You can set the following debug levels to log information.

- 0: no debug information

- 1: log ASCII strings (must be COBS wrapped) (typically used for debugging code on the MCU)

- 4: log points received or sent to the MCU

- 8: log cobs decoded data (must be COBS wrapped)

- 9: log raw serial data received (pre-COBS)

Zephyr Examples

The zephyr-siot repository contains examples of MCU firmware that can interface with Simple IoT over serial, USB, and Network connections. This is a work in progress and is not complete.

Arduino Examples (no longer maintained)

Several Arduino examples are available that can be used to demonstrate this functionality.

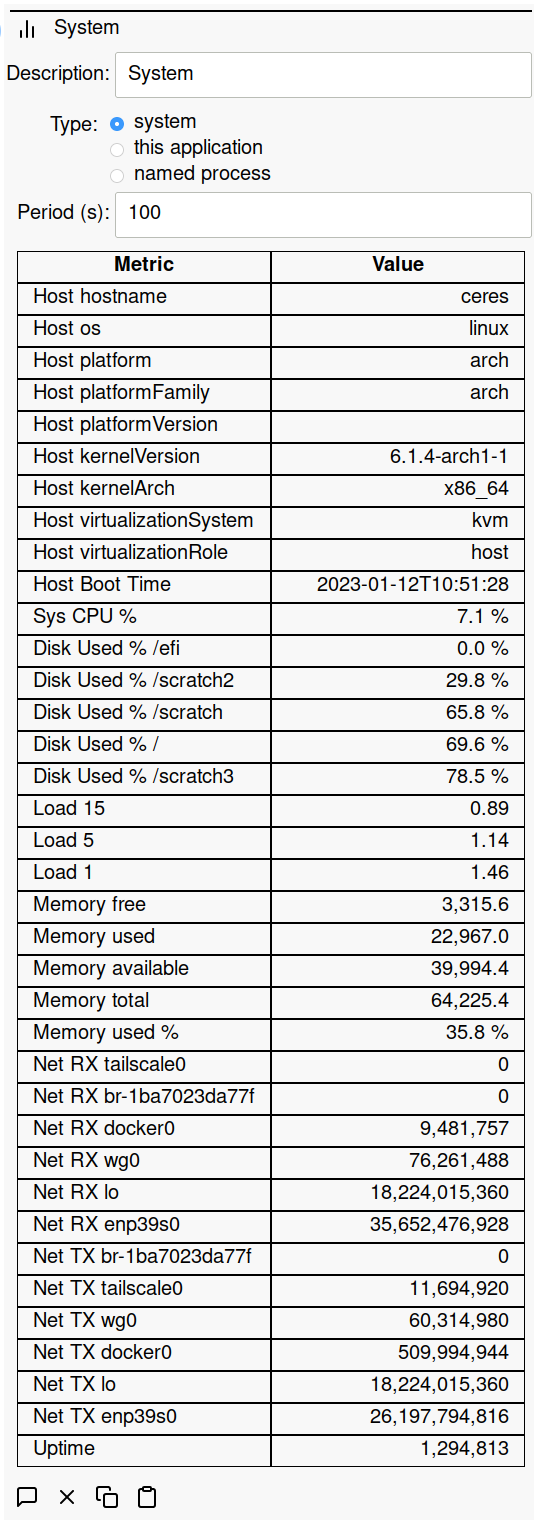

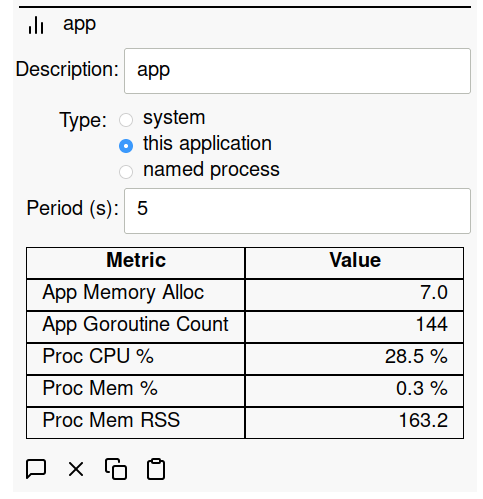

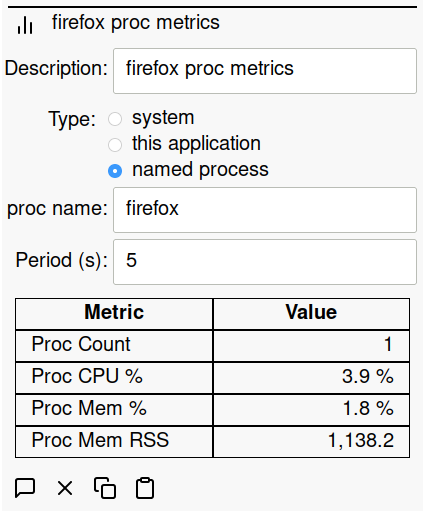

Metrics

An important part of maintaining healthy systems is to monitor metrics for the application and system. SIOT can collect metrics for:

- the system

- the SIOT application

- any named processes

For the named process, if there are multiple processes of the same name, then we add values for all processes found.

System Metrics

SIOT Application Metrics

Named Process Metrics

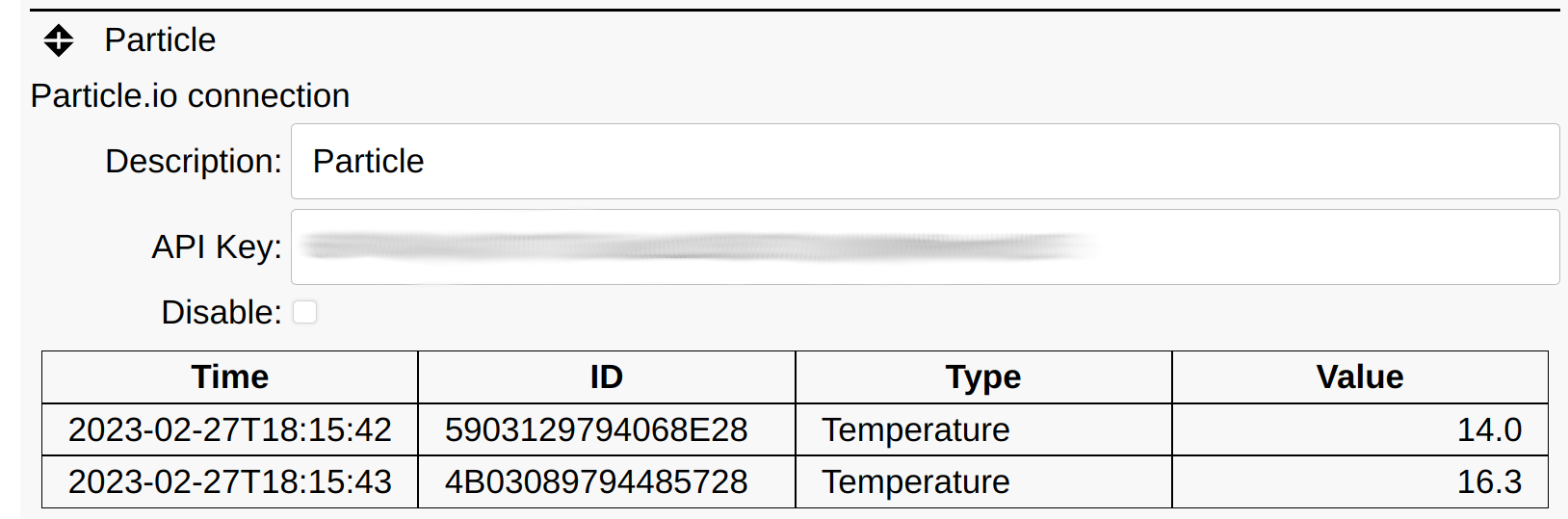

Particle.io

SIOT provides a client for pulling data from Particle.io. Particle provide modules to quickly implement cellular connected MCU based IoT systems. They take care of managing the device (cellular connection, firmware deployments, etc.), and you only need to write the application.

The Particle cloud event API is used to obtain the data. A connection is made from the SIOT instance to the Particle Cloud and then data is sent back to SIOT using Server Sent Events (SSE). The advantage of this mechanism is that complex webhooks are not needed on the SIOT side, which requires additional firewall/web server configuration.

A Particle API key is needed which can be generated using the particle token

CLI command.

The above example shows data provided by the Particle based Simple IoT Particle Gateway and 1-wire temperature sensors, and SIOT firmware.

Data is published to Particle in the following format:

[

{

"id": "4B03089794485728",

"type": "temp",

"value": 15.25

}

]

The SIOT Particle client populates the point key field with the 1-wire device

ID.

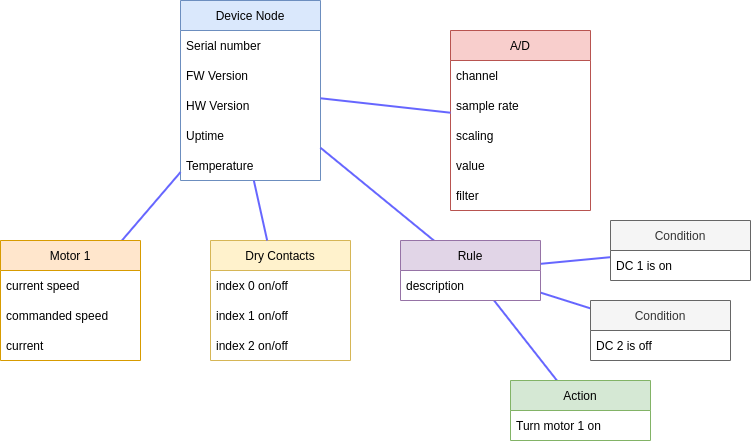

Rules

Contents

The Simple IoT application has the ability to run rules - see the video below for a demo:

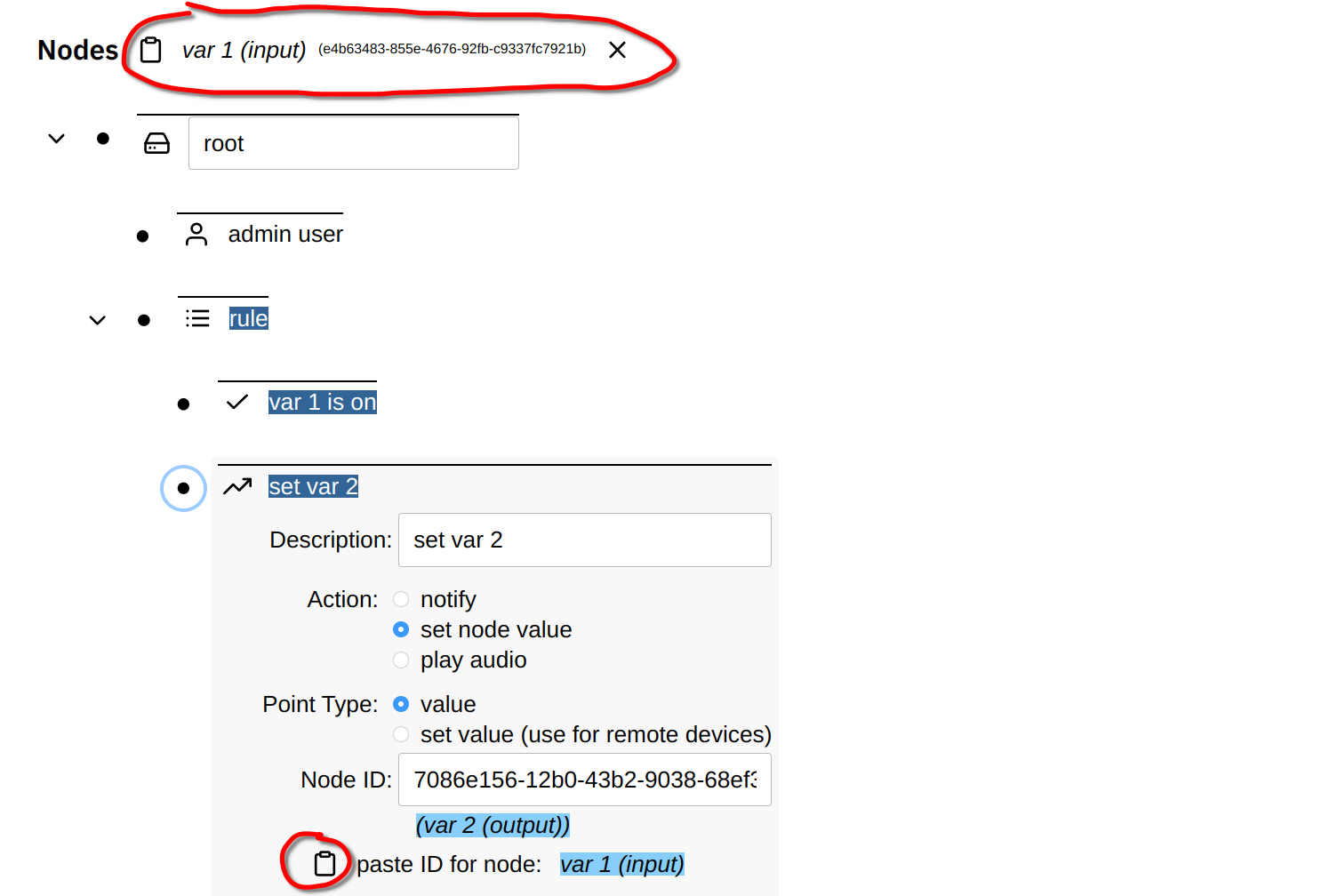

Rules are composed of one or more conditions and actions. All conditions must be true for the rule to be active.

Node point changes cause rules of any parent node in the tree to be run. This allows general rules to be written higher in the tree that are common for all device nodes (for instance device offline).

In the below configuration, a change in the SBC propagates up the node tree,

thus both the D5 on rule or the Device offline rule are eligible to be run.

Node linking

Both conditions and actions can be linked to a node ID. If you copy a node, its ID is stored in a virtual clipboard and displayed at the top of the screen. You can then paste this node ID into the Node ID field in a condition or action.

Conditions

Each condition may optionally specify a minimum active duration before the condition is considered met. This allows timing to be encoded in the rules.

Node state

A point value condition looks at the point value of a node to determine if a condition is met. Qualifiers that filter points the condition is interested in can set including:

- Node ID (if left blank, any node that is a descendant of the rule parent)

- Point type (“value” is probably the most common type)

- Point Key (used to index into point arrays and objects)

If the provided qualification is met, then the condition may check the point value/text fields for a number of conditions including:

- number:

>,<,=,!= - text:

=,!=,contains - boolean:

on,off

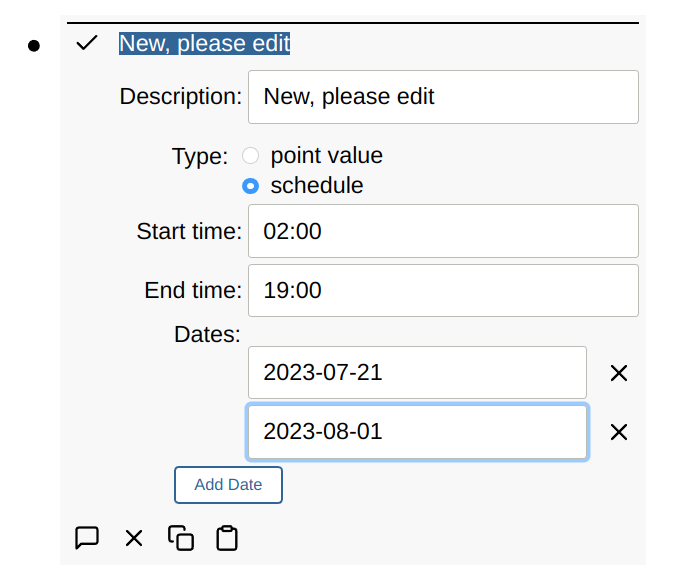

Schedule

Rule conditions can be driven by a schedule that is composed of:

- start/stop time

- weekdays

- dates

If no weekdays are selected, then all weekdays are included.

When the dates are used, then weekdays are disabled.

Conversely, when a weekday is enabled, dates are disabled.

As a time range can span two days, the start time is used to qualify weekdays and dates.

See also a video demo:

Actions

Every action has an optional repeat interval. This allows rate limiting of actions like notifications.

Notifications

Notifications are the simplest rule action and are sent out when:

- All conditions are met

- Time since last notification is greater than the notify action repeat interval.

Every time a notification is sent out by a rule, a point is created/updated in the rule with the following fields:

id: node of point that triggered the ruletype: “lastNotificationSent”time: time the notification was sent

Before sending a notification we scan the points of the rule looking for when the last notification was sent to decide if its time to send it.

Set node point

Rules can also set points in other nodes. For simplicity, the node ID must be currently specified along with point parameters and a number/bool/text value.

Typically a rule action is only used to set one value. In the case of on/off actions, one rule is used to turn a value on, and another rule is used to turn the same value off. This allows for hysteresis and more complex logic than in one rule handled both the on and off states. This also allows the rules logic to be stateful. If you don’t need hysteresis or complex state, the rule “inactive action” can be used, which allows the rule to take action when it goes both active and inactive.

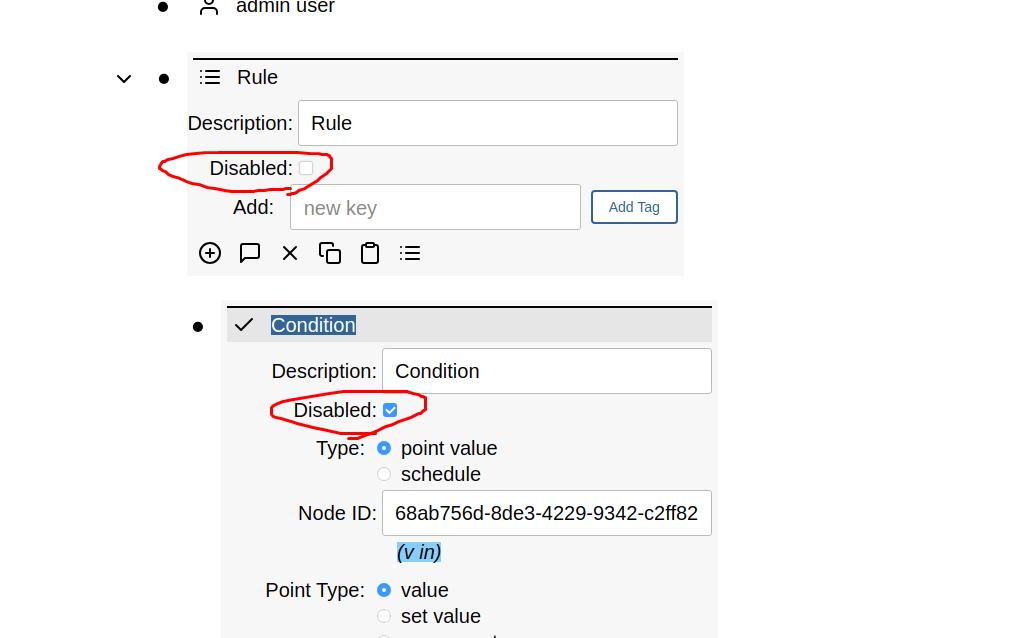

Disable Rule/Condition/Action

Disable Rule

A rule can be disabled. If the rule is disabled while active, then the rule inactive actions are run so that things get cleaned up if necessary and the actions are not left active.

Disable Condition

If there are no conditions, or all conditions are disabled, the rule is inactive. Otherwise, disabled conditions are simply ignored. For example, if there is a disabled condition and a non-disabled active condition, the rule is active.

Disable Action

A disabled action is not run.

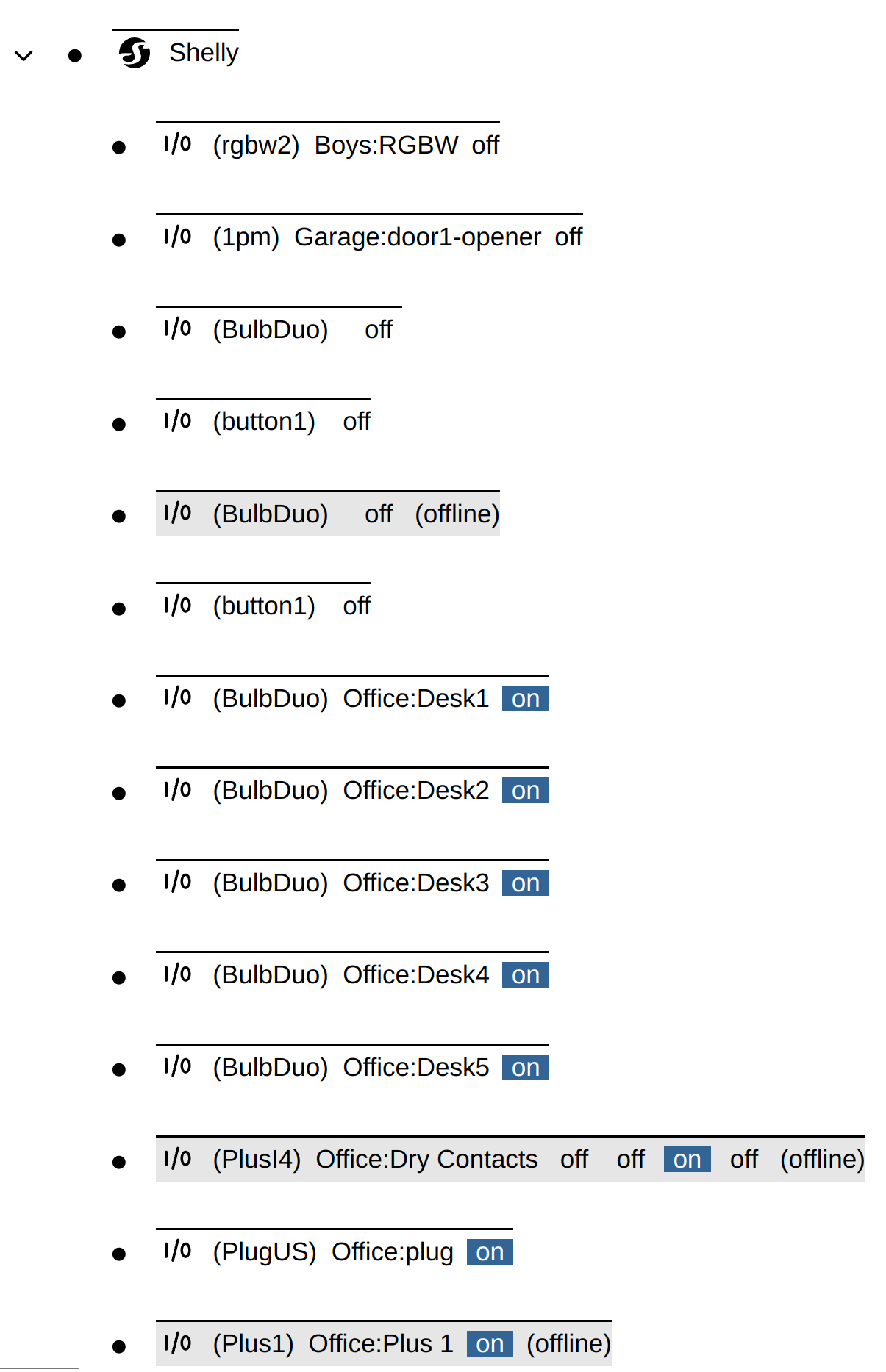

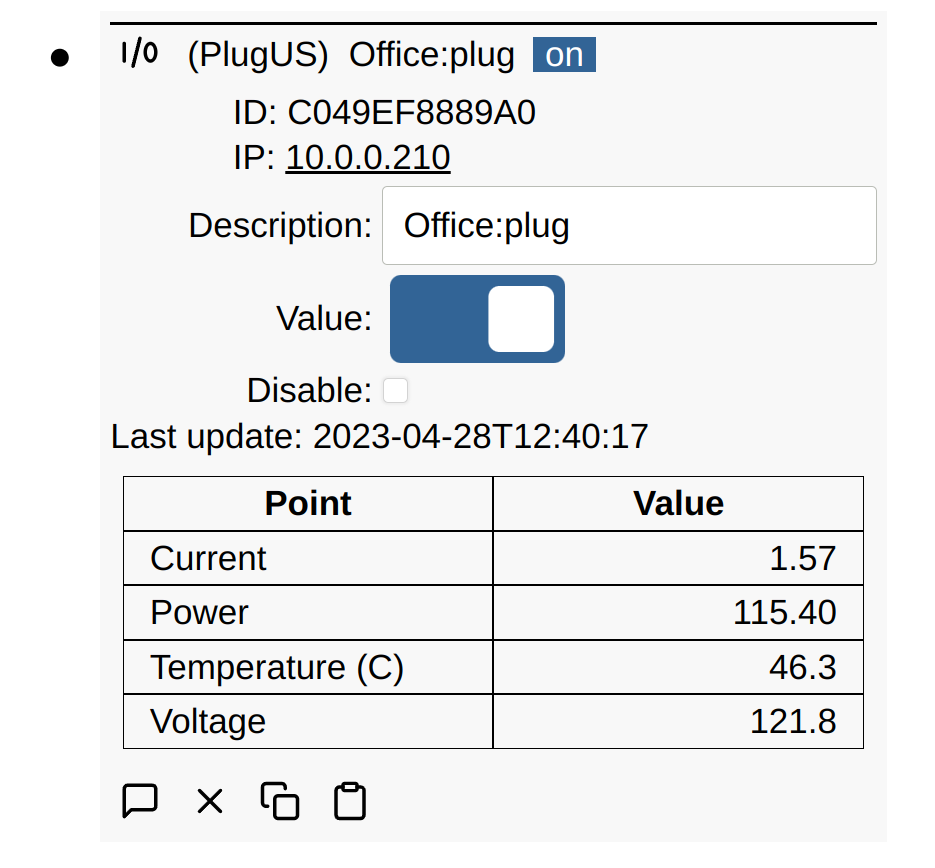

Shelly IoT

Shelly sells a number of reasonably priced open IoT devices for home automation and industrial control. Most support Wi-Fi network connections and some of the Industrial line also supports Ethernet. The API is open and the devices support a number of communication protocols including HTTP, MQTT, CoAP, etc. They also support mDNS so they can be discovered on the network.

Simple IoT provides the following support:

- Automatic discovery of all Shelly devices on the network using mDNS

- Support for the following devices:

1pm(not tested)Bulb Duo(on/off only)Plus 1Plus 1PM(not tested)Plus 2PMPlus Plug(only US variant tested)- Measurements such as Current, Power, Temp, Voltage are collected.

Plus i4

- Currently status is polled via HTTP every 2 seconds

Setup

- Configure the Shelly devices to connect to your Wi-Fi network. There are

several options:

- Use the Shelly phone app

- A new device will start up in access point mode. Attach a computer or phone to this AP, open http://192.168.33.1 (default address of a reset device), and then configure the Wi-Fi credentials using the built-in Web UI.

- Add the Shelly client in SIOT

- The Shelly client will then periodically scan for new devices and add them as child nodes.

Example

Plug Example

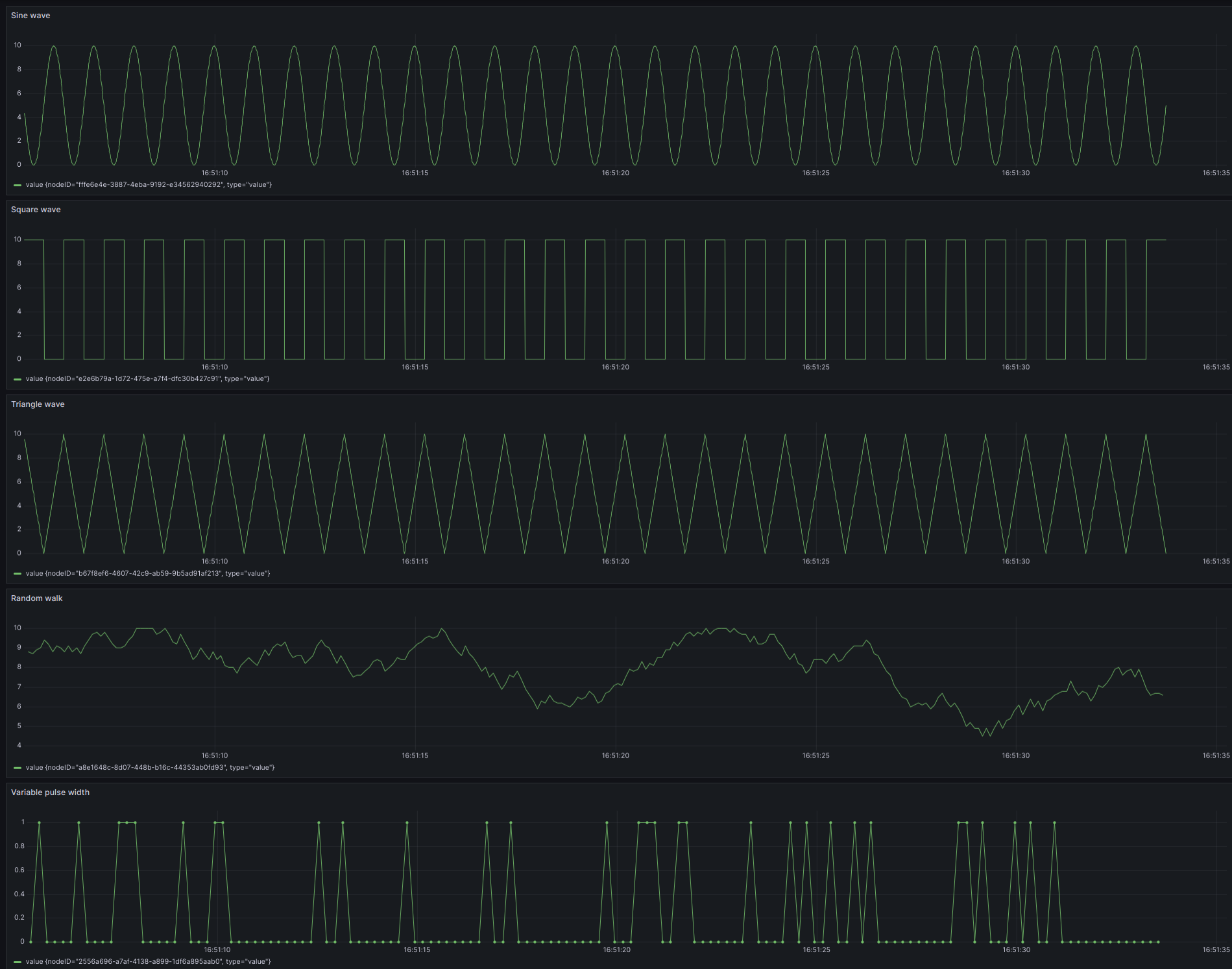

Signal Generator Client

The signal generator can be used to generate various signals including:

- Sine wave

- Square wave

- Triangle wave

- Random walk

Below is a screen-shot of the generated data displayed in Grafana.

Configuration

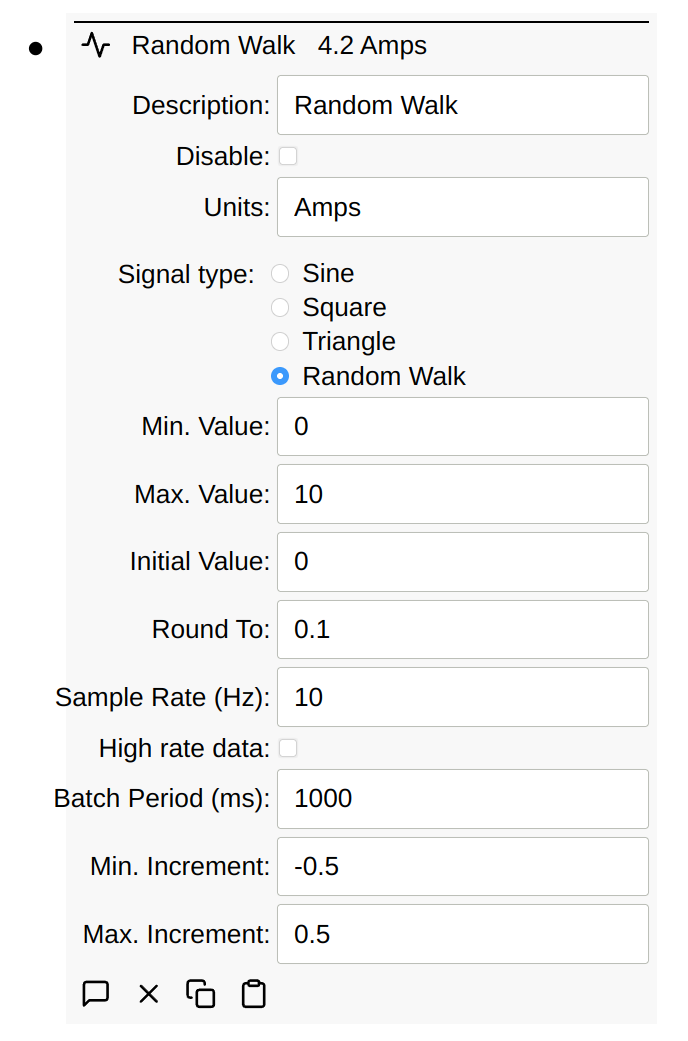

The signal generated can be configured with the following parameters:

Most of the parameters are self-explanatory. With a Random Walk, you typically need to enter a negative number for the minimum. Increment as shown above. This causes the negative number generated to be negative roughly half the time.

The rounding can also be used to generate binary signals. Imagine a signal generator with these settings:

Max. value= 1Min. value= 0Initial value= 0Round to= 1Min. increment= -7Max. increment= 3Sample Rate= 20 milliseconds

Due to min/max/round to options, this is a binary value, either 0 or 1, biased

toward 0 (due to min/max increment options). This could be useful for

simulating binary switches or something like it. Effectively, this will hold the

value for at least 20m and picks a random number between -7 and 3. Due to

rounding, if value is currently 0, there’s a 25% chance it becomes 1. If 1,

there’s a 65% chance it becomes 0. This means that the value will be 0 roughly

91.25% (= 75% + (1 - 75%) * 65%) of the time.

Schema

Below is an export of several types of signal generator nodes:

children:

- id: 2556a696-a7af-4138-a899-1df6a895aab0

type: signalGenerator

points:

- type: batchPeriod

value: 1000.0

- type: description

text: Variable pulse width

- type: disabled

- type: frequency

value: 1.0

- type: initialValue

text: "0"

- type: maxIncrement

value: 3.0

- type: maxValue

value: 1.0

- type: minIncrement

value: -7.0

- type: minValue

text: "0"

- type: roundTo

value: 1.0

- type: sampleRate

value: 5.0

- type: signalType

text: random walk

- type: units

text: Amps

- type: value

value: 1.0

- id: b67f8ef6-4607-42c9-ab59-9b5ad91af213

type: signalGenerator

points:

- type: batchPeriod

value: 1000.0

- type: description

text: Triangle

- type: disabled

- type: frequency

value: 1.0

- type: initialValue

text: "0"

- type: maxIncrement

value: 0.5

- type: maxValue

value: 10.0

- type: minIncrement

value: 0.1

- type: minValue

text: "0"

- type: sampleRate

value: 100.0

- type: signalType

text: triangle

- type: value

value: 6.465714272450723e-12

- id: e2e6b79a-1d72-475e-a7f4-dfc30b427c91

type: signalGenerator

points:

- type: batchPeriod

value: 1000.0

- type: description

text: Square

- type: disabled

- type: frequency

value: 1.0

- type: initialValue

text: "0"

- type: maxValue

value: 10.0

- type: minValue

text: "0"

- type: sampleRate

value: 100.0

- type: signalType

text: square

- type: value

value: 10.0

- id: fffe6e4e-3887-4eba-9192-e34562940292

type: signalGenerator

points:

- type: batchPeriod

value: 1000.0

- type: description

text: Sine

- type: disabled

- type: frequency

value: 1.0

- type: initialValue

text: "0"

- type: maxValue

value: 10.0

- type: minValue

text: "0"

- type: sampleRate

value: 100.0

- type: signalType

text: sine

- type: value

value: 4.999999999989843

- id: a8e1648c-8d07-448b-b16c-44353ab0fd93

type: signalGenerator

points:

- type: batchPeriod

value: 1000.0

- type: description

text: Random Walk

- type: disabled

- type: frequency

value: 1.0

- type: initialValue

text: "0"

- type: maxIncrement

value: 0.5

- type: maxValue

value: 10.0

- type: minIncrement

value: -0.5

- type: minValue

text: "0"

- type: roundTo

value: 0.1

- type: sampleRate

value: 10.0

- type: signalType

text: random walk

- type: units

text: Amps

- type: value

value: 9.1

Synchronization

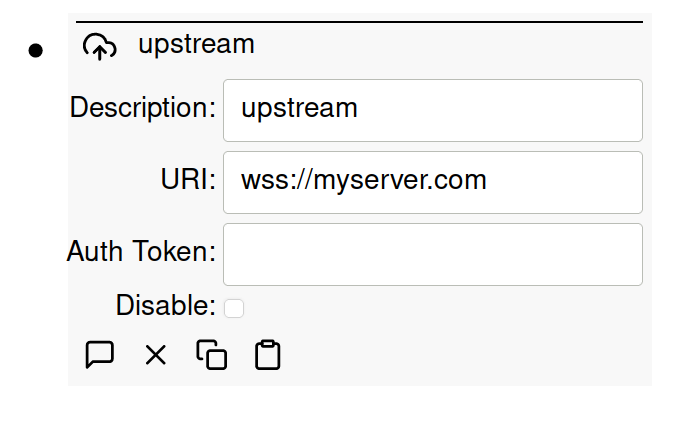

Simple IoT provides for synchronized upstream connections via NATS or NATS over WebSocket.

To create an upstream sync, add a sync node to the root node on the downstream

instance. If your upstream server has a name of myserver.com, then you can use

the following connections URIs:

nats://myserver.com:4222(4222 is the default NATS port)ws://myserver.com(WebSocket unencrypted connection)wss://myserver.com(WebSocket encrypted connection)

IP addresses can also be used for the server name.

Auth token is optional and needs to be configured in an environment variable for the upstream server. If your upstream is on the public internet, you should use an auth token. If both devices are on an internal network, then you may not need an auth token.

Typically, wss are simplest for servers that are fronted by a web server like

Caddy that has TLS certs. For internal connections, nats or ws connections

are typically used.

Occasionally, you might also have edge devices on networks where NATS outgoing

connections on port 4222 are blocked. In this case, it’s handy to be able to use

the wss connection, which just uses standard HTTP(S) ports.

Videos

There are also several videos that demonstrate upstream connections:

Simple IoT upstream synchronization support

Simple IoT Integration with PLC Using Modbus

Update

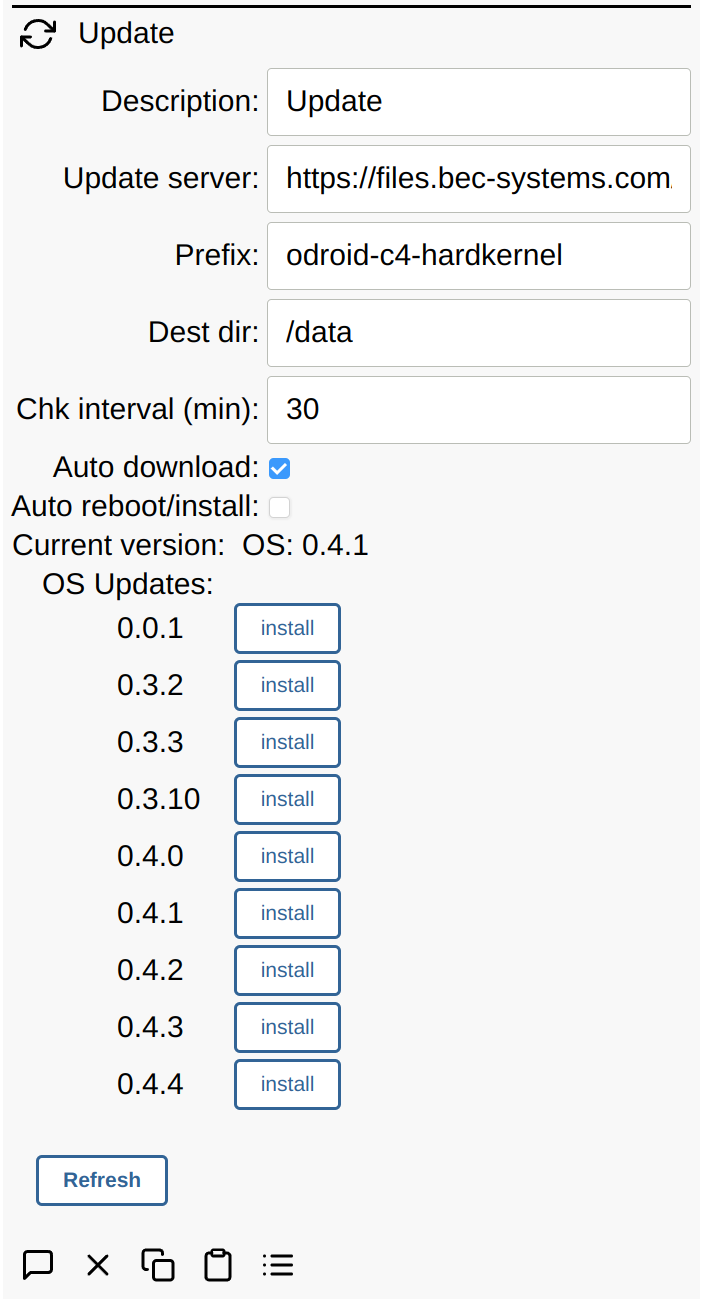

The Simple IoT update client facilitates updating software. Currently, it is designed to download images for use by the Yoe Updater. The process can be executed manually, or there are options to automatically download and install new updates.

There are several options:

- Update server: HTTP server that contains the following files:

- files.txt: contains a list of update files on the server

- update files named:

<prefix>_<version>.updversionshould follow Semantic Versioning:MAJOR.MINOR.PATCHprefixmust match what the updater on the target device is expecting typically host/machine name.

prefix: described above - typically host/machine name. This is auto detected on first startup, but can be changed if necessary.Dest dir: Destination directory for downloaded updates. Defaults to/data.Chk interval: time interval at which the client checks for new updates.Auto download: option to periodically check the server for new updates and download the latest version.Auto reboot/install: option to auto install/reboot if a new version is detected and downloaded.

USB

Browser

The browser client enables control and configuration of the

Yoe Kiosk Browser as it is

when installed as part of Yoe Distro. On changing the configuration, changes are

saved to /etc/default/yoe-kiosk-browser for the browser and

/etc/default/eglfs.json for EGLFS, and the yoe-kiosk-browser service is

restarted automatically.

Graphing Data

Simple IoT is designed to work with several other applications for storing time series data and viewing this data in graphs.

InfluxDB

InfluxDB is currently the recommended way to store historical data. This database is efficient and can run on embedded platforms like the Raspberry PI as well as desktop and server machines. To connect SIOT to InfluxDB, add a database node and fill in the parameters.

Grafana

Grafana is a very powerful graphing solution that works well with InfluxDB. Although InfluxDB has its own web interface and graphing capability, generally we find Grafana to be more full featured and easier to use.

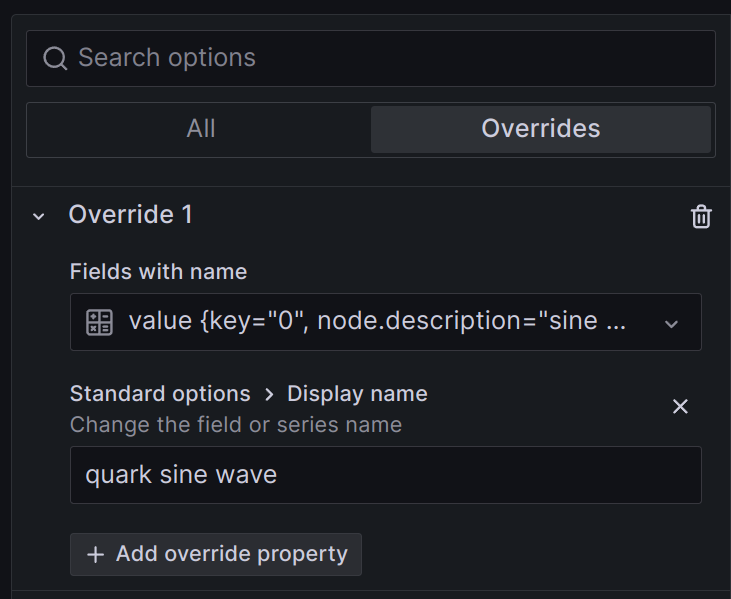

Changing the Display name (labels) in Grafana

Often with an Influx query, we’ll get trace display names that look like the below:

Often, much of this data is irrelevant or redundant with the query. One way to change the label is with an Override:

This can be tedious to set up and maintain.

Often a better way is to

add tags

to the nodes generating the data and then display the node tags in the display

name by using the Influx map function.

from(bucket: "siot")

|> range(start: v.timeRangeStart, stop:v.timeRangeStop)

|> filter(fn: (r) =>

r._measurement == "points" and

r._field == "value" and

r.type == "value")

|> filter(fn: (r) => r["node.type"] == "signalGenerator")

|> map(fn: (r) => ({_value:r._value, _time:r._time, _field:r["node.tag.machine"] + ":" + r["node.description"]}))

In this case we are displaying the node machine tag and description. The result is very nice:

Configuration

Environment variables

Environment variables are used to control various aspects of the application. The following are currently defined:

- General

SIOT_HTTP_PORT: HTTP network port the SIOT server attaches to (default is 8118)SIOT_DATA: directory where any data is storedSIOT_AUTH_TOKEN: auth token used for NATS and HTTP device API, default is blank (no auth)OS_VERSION_FIELD: the field in/etc/os-releaseused to extract the OS version information. Default isVERSION, which is common in most distros. The Yoe Distribution populatesVERSION_IDwith the update version, which is probably more appropriate for embedded systems built with Yoe. See ref/version.

- NATS configuration

SIOT_NATS_PORT: Port to run NATS on (default is 4222 if not set)SIOT_NATS_HTTP_PORT: Port to run NATS monitoring interface (default is 8222)SIOT_NATS_SERVER: defaults to nats://127.0.0.1:4222SIOT_NATS_TLS_CERT: points to TLS certificate file. If not set, TLS is not used.SIOT_NATS_TLS_KEY: points to TLS certificate keySIOT_NATS_TLS_TIMEOUT: Configure the TLS upgrade timeout. NATS defaults to a 0.5 second timeout for TLS upgrade, but that is too short for some embedded systems that run on low end CPUs connected over cellular modems (we’ve see this process take as long as 4 seconds). See NATS documentation for more information.SIOT_NATS_WS_PORT: Port to run NATS WebSocket (default is 9222, set to 0 to disable)

- Particle.io

SIOT_PARTICLE_API_KEY: key used to fetch data from Particle.io devices running Simple IoT firmware

Configuration export

Nodes can be exported to a YAML file. This is a useful to:

- Back up the current configuration

- Dump node data for debugging

- Transfer a configuration or part of a configuration from one instance to another

To export the entire tree:

siot export > backup.yaml

A subset of the tree can be exported by specifying the node ID:

siot export -nodeID 9d7c1c03-0908-4f8b-86d7-8e79184d441d > export.yaml

Configuration import

Nodes defined in a YAML file can be imported into a running SIOT instance using

the CLI, or the Go API. When using the CLI, the import file must be specified on

STDIN. If there are any node IDs in the import they are mapped to new IDs to

eliminate any possibility of ID conflicts if the config is imported into

multiple systems with a common upstream sync, etc.

If nodes reference each other (for instance a rule condition and a Modbus node), then friendly IDs can be used to make it easy to edit and reference. These friendly IDs will be replaced by a common UUID during import.

To import nodes at a specific location (typically a group), then you can specify the parent node ID. This ID can be obtained by expanding the node and clicking the copy button. This will put the ID into your system copy buffer.

siot import -parentID 9d7c1c03-0908-4f8b-86d7-8e79184d441d < import.yaml

If you want to wipe out any existing state and restore a SIOT to a known state,

you can run an import with the -parentID set to root. It is highly

recommended you restart SIOT after this is done to minimize the chance of any

code still running that caches the root ID which has now changed.

siot import -parentID root < backup.yaml

Again, by default, the import command will create new IDs to minimize the chance

of any ID conflicts. If you want to preserve the IDs in the YAML file, you can

specify the -preserveIDs option - WARNING, use this option with caution.

Importing a backup to root with -preserveIDs is a handy way to restore a

system to a known previous state. However, new nodes that don’t exist in the

backup will not be deleted - the import only adds nodes/points.

If authentication or a different server is required, this can be specified through command line arguments or the following environment variables (see descriptions above):

SIOT_NATS_SERVERSIOT_AUTH_TOKEN

It is easy to make a mess with the import command, so think through what you are doing first. SIOT does not prevent you from making a mess!

siot import --help for more details.

Example YAML file:

nodes:

- type: group

points:

- type: description

text: "group 1"

children:

- type: variable

points:

- type: description

text: var 1

- type: value

value: 10

Status

The Simple IoT project is still in a heavy development phase. Most of the core concepts are stable, but APIs, packet formats, and implementation will continue to change for some time yet. SIOT has been used in several production systems to date with good success, but be prepared to work with us (report issues, help fix bugs, etc.) if you want to use it now.

Handling of high rate sensor data

Currently each point change requires quite a bit computation to update the HASH values in upstream graph nodes. For repetitive data, this is not necessary as new values are continually coming in, so we will at some point make an option to specify points values as repetitive. This will allow SIOT to scale to more devices and higher rate data.

User Interface

The web UI is currently polling the SIOT backend every 4 seconds via HTTP. This works OK for small datasets, but uses more data than necessary and has a latency of up to 4 seconds. Long term we will run a NATS client in the frontend over a WebSocket so the UI response is real-time and new data gets pushed to the browser.

Security

Currently, and device that has access to the system can write or write to any data in the system. This may be adequate for small or closed systems, but for larger systems, we need per-device authn/authz. See issue #268, PR #283, and our security document for more information.

Errata

Any issues we find during testing we log in GitHub issues, so if you encounter something unexpected, please search issues first. Feel free to add your observations and let us know if an issues is impacting you. Several issues to be aware of:

- We don’t handle loops in the graph tree yet. This will render the instance unusable and you’ll have to clean the database and start over.

Frequently Asked Questions

Q: How is SIOT different than Home Assistant, OpenHAB, Domoticz, etc.?

Although there may be some overlap and Simple IoT may eventually support a number of off the shelf consumer IoT devices, the genesis, and intent of the project is for developing IoT products and the infrastructure required to support them.

Q: How is SIOT different than Particle.io, etc.?

Particle.io provides excellent infrastructure to support their devices and solve many of the hard problems such as remote firmware update, getting data securely from device to cloud, and efficient data bandwidth usage. But, they don’t provide a way to provide a user facing portal for a product that customers can use to see data and interact with the device.

Q: How is SIOT different than AWS/Azure/GCP/… IoT?

SIOT is designed to be simple to develop and deploy without a lot of moving parts. We’ve reduced an IoT system to a few basic concepts that are exactly the same in the cloud and on edge devices. This symmetry is powerful and allows us to easily implement and move functionality wherever it is needed. If you need Google Scale, SIOT may not be the right choice; however, for smaller systems where you want a system that is easier to develop, deploy, and maintain, consider SIOT.

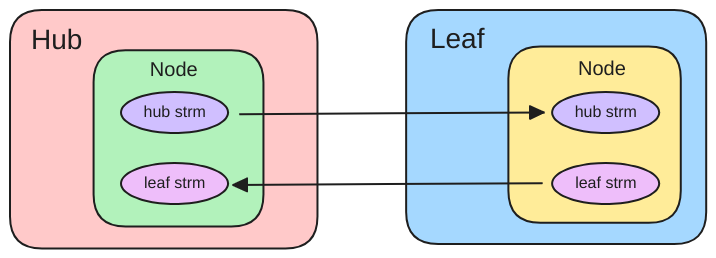

Q: Can’t NATS JetStream do everything SIOT does?

This is a good question and I’m not sure yet. NATS has some very interesting features like JetStream which can queue data and store data in a key-value store and data can be synchronized between instances. NATS also has a concept of leaf-nodes, which conceptually makes sense for edge/gateway connections. JetStream is optimized for data flowing in one direction (ex: orders through fulfillment). SIOT is optimized for data flowing in any direction and data is merged using data structures with CRDT (conflict-free replicated data types) properties. SIOT also stores data in a DAG (directed acyclic graph) which allows a node to be a child of multiple nodes, which is difficult to do in a hierarchical namespace. Additionally, each node is defined by an array of points and modifications to the system are communicated by transferring points. SIOT is a batteries included complete solution for IoT solutions, including a web framework, clients for various types of IO (ex: Modbus) and cloud services (ex: Twilio). We will continue to explore using more of NATS core functionality as we move forward.

Documentation

Good documentation is critical for any project and to get good documentation, the process to create it must be as frictionless as possible. With this in mind, we’ve structured SIOT documentation as follows:

- Markdown is the primary source format.

- Documentation lives in the same repo as the source code. When you update the code, update the documentation at the same time.

- Documentation is easily viewable in GitHub, or our generated docs site. This allows any snapshot of SIOT to contain a viewable snapshot of the documentation for that revision.

mdbookis used to generate the documentation site.- All diagrams are stored in a

single draw.io

file. This allows you to easily see what diagrams are available and easily

copy pieces from existing diagrams to make new ones. Then generate a PNG for

the diagram in the

images/directory in the relevant documentation directory.

Vision

This document attempts to outlines the project philosophy and core values. The basics are covered in the readme. As the name suggests, a core value of the project is simplicity. Thus, any changes should be made with this in mind. Although this project has already proven useful on several real-world project, it is a work in progress and will continue to improve. As we continue to explore and refine the project, many things are getting simpler and more flexible. This process takes time and effort.

“When you first start off trying to solve a problem, the first solutions you come up with are very complex, and most people stop there. But if you keep going, and live with the problem and peel more layers of the onion off, you can often times arrive at some very elegant and simple solutions.” - Steve Jobs

Guiding principles

- Simple concepts are flexible and scale well.

- IoT systems are inherently distributed, and distributed systems are hard.

- There are more problems to solve than people to solve them, thus it makes sense to collaborate on the common technology pieces.

- There are a lot of IoT applications that are not Google scale (10-1000 device range).

- There is significant opportunity in the long tail of IoT, which is our focus.

- There is value in custom solutions (programming vs drag-n-drop).

- There is value in running/owning our own platform.

- A single engineer should be able to build and deploy a custom IoT system.

- We don’t need to spend excessive amounts of time on operations. For smaller deployments, we deploy one binary to a cloud server and we are done with operations. We don’t need 20 microservices when one monolith will work just fine.

- For many applications, a couple of hours of down time is not the end of the world. Thus, a single server that can be quickly rebuilt as needed is adequate and in many cases more reliable than complex systems with many moving parts.

Technology choices

Choices for the technology stack emphasize simplicity, not only in the language, but just as important, in the deployment and tooling.

- Backend

- Go

- Simple language and deployment model

- Nice balance of safety + productivity

- Excellent tooling and build system

- See this thread for more discussion/information

- Go

- Frontend

- Single Page Application (SPA) architecture

- Fits well with real-time applications where data is changing all the time

- Easier to transition to Progressive Web Apps (PWA)

- Elm

- Nice balance of safety + productivity

- Excellent compiler messages

- Reduces possibility for run time exceptions in browser

- Does not require a huge/complicated/fragile build system typical in JavaScript frontends.

- excellent choice for SPAs

- elm-ui

- What if you never had to write CSS again?

- Fun, yet powerful way to lay out a user interface and allows you to efficiently make changes and get the layout you want.

- Single Page Application (SPA) architecture

- Database

- SQLite

- See Store

- Eventually support multiple database backends depending on scaling/admin needs

- SQLite

- Cloud Hosting

- Any machine that provides ability run long-lived Go applications

- Any MAC/Linux/Windows/rPI/Beaglebone/Odroid/etc. computer on your local network.

- Cloud VMs: Digital Ocean, Linode, GCP compute engine, AWS EC2, etc. Can easily host on a $5/mo instance.

- Edge Devices

- Any device that runs Linux (rPI, Beaglebone-black, industrial SBCs, your custom hardware …)

In our experience, simplicity and good tooling matter. It is easy to add features to a language, but creating a useful language/tooling that is simple is hard. Since we are using Elm on the frontend, it might seem appropriate to select a functional language like Elixir, Scala, Clojure, Haskell, etc. for the backend. These environments are likely excellent for many projects, but are also considerably more complex to work in. The programming style (procedural, functional, etc.) is important, but other factors such as simplicity/tooling/deployment are also important, especially for small teams who don’t have separate staff for backend/frontend/operations. Learning two simple languages (Go and Elm) is a small task compared to dealing with huge languages, fussy build tools, and complex deployment environments.

This is just a snapshot in time - there will likely be other better technology choices in the future. The backend and frontend are independent. If either needs to be swapped out for a better technology in the future, that is possible.

Architecture

This document describes how the Simple IoT project fulfills the basic requirements as described in the top level README.

There are two levels of architecture to consider:

- System: how multiple SIOT instances and other applications interact to form a system.

- Application: how the SIOT application is structured.

- Clients: all about SIOT clients where most functionality is implemented.

High Level Overview

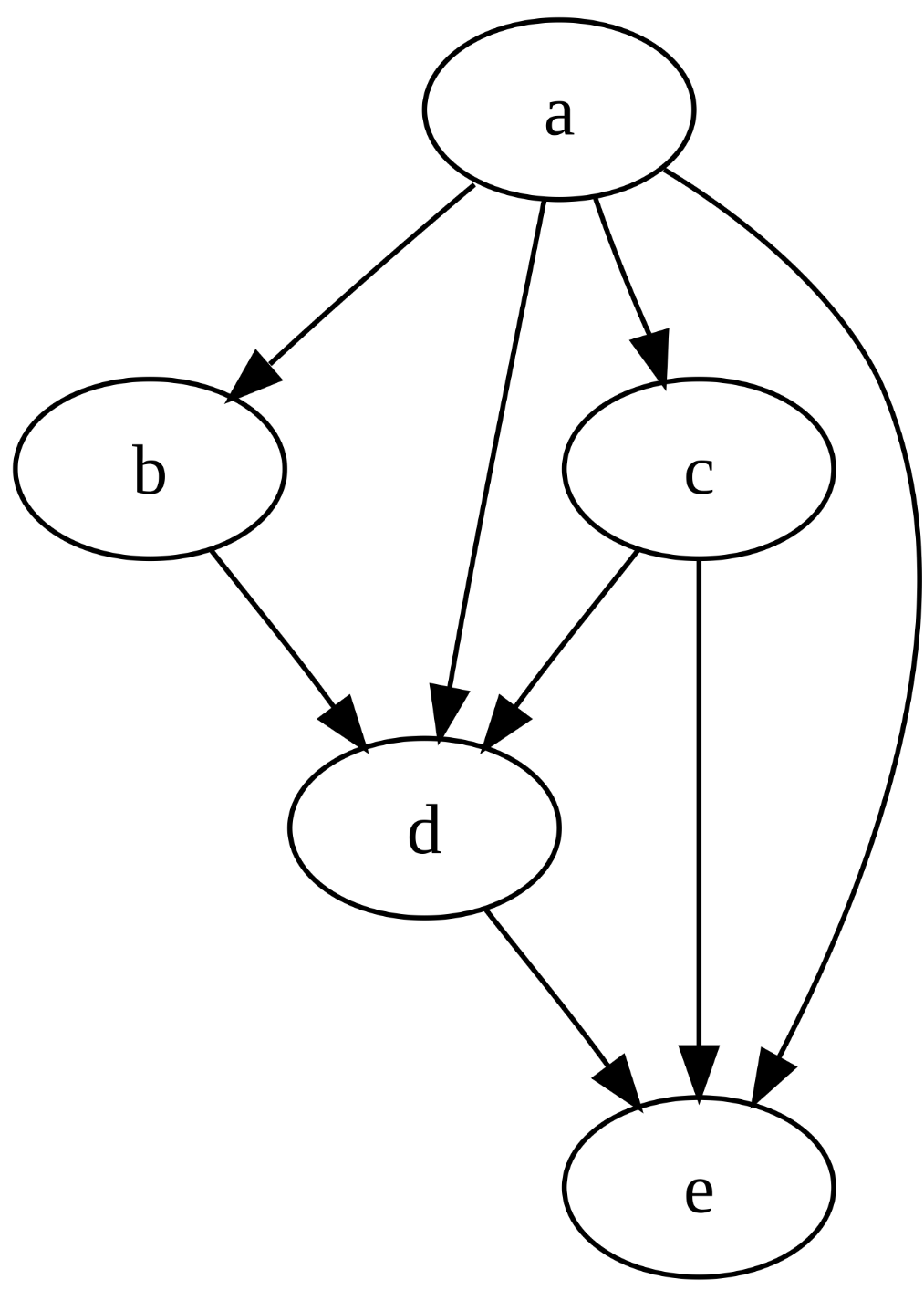

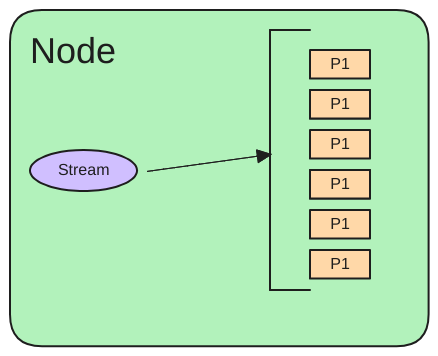

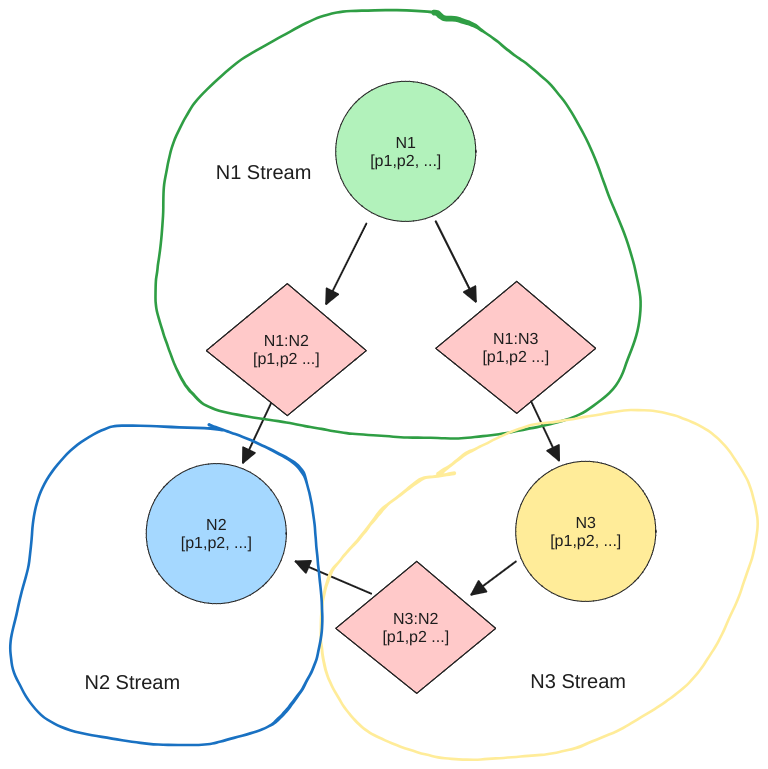

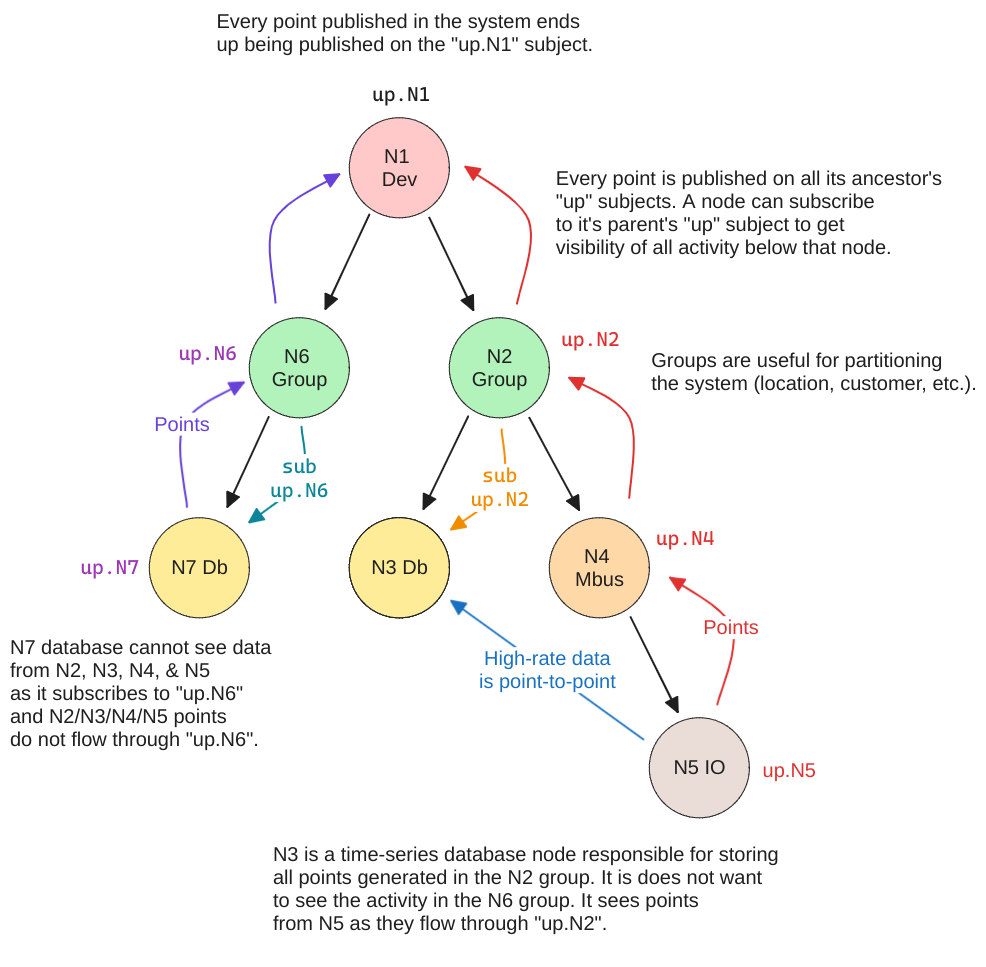

Simple IoT functions as a collection of connected, distributed instances that communicate via NATS. Data in the system is represented by nodes which contain an array of points. Data changes are communicated by sending points within an instance or between instances. Points in a node are merged such that newer points replace older points. This allows granular modification of a node’s properties. Nodes are organized in a DAG (directed acyclic graph). This graph structure defines many properties of the system such as what data users have access to, the scope of rules and notifications, and which nodes external services apply to. Most functionality in the system is implemented in clients, which subscribe and publish point changes for nodes they are interested in.

System Architecture

Contents

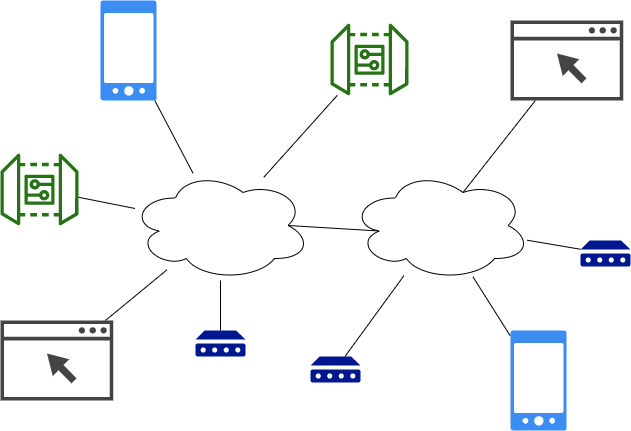

IoT Systems are distributed systems

IoT systems are inherently distributed where data needs to be synchronized between a number of different systems including:

- Cloud (one to several instances depending on the level of reliability desired)

- Edge devices (many instances)

- User Interface (phone, browser)

Typically, the cloud instance stores all the system data, and the edge, browser, and mobile devices access a subset of the system data.

Extensible architecture

Any siot app can function as a standalone, client, server or both. As an

example, siot can function both as an edge (client) and cloud apps (server).

- Full client: full SIOT node that initiates and maintains connection with another SIOT instance on a server. Can be behind a firewall, NAT, etc.

- Server: needs to be on a network that is accessible by clients

We also need the concept of a lean client where an effort is made to minimize the application size to facilitate updates over IoT cellular networks where data is expensive.

Device communication and messaging

In an IoT system, data from sensors is continually streaming, so we need some type of messaging system to transfer the data between various instances in the system. This project uses NATS.io for messaging. Some reasons:

- Allows us to push real-time data to an edge device behind a NAT, on cellular network, etc. - no public IP address, VPN, etc. required.

- Is more efficient than HTTP as it shares one persistent TCP connection for all messages. The overhead and architecture is similar to MQTT, which is proven to be a good IoT solution. It may also use less resources than something like observing resources in CoAP systems, where each observation requires a separate persistent connection.

- Can scale out with multiple servers to provide redundancy or more capacity.

- Is written in Go, so possible to embed the server to make deployments simpler for small systems. Also, Go services are easy to manage as there are no dependencies.

- Focus on simplicity - values fit this project.

- Good security model.

For systems that only need to send one value several times a day, CoAP is probably a better solution than NATS. Initially we are focusing on systems that send more data - perhaps 5-30MB/month. There is no reason we can’t support CoAP as well in the future.

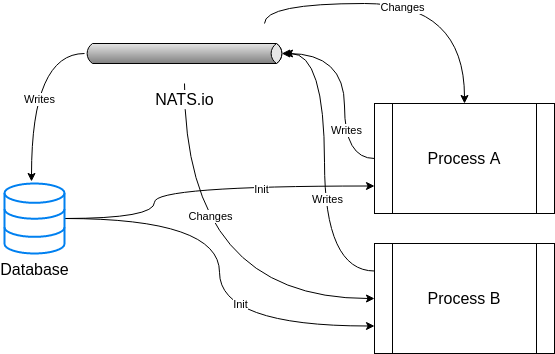

Data modification

Where possible, modifying data (especially nodes) should be initiated over NATS vs direct db calls. This ensures anything in the system can have visibility into data changes. Eventually we may want to hide db operations that do writes to force them to be initiated through a NATS message.

Simple, Flexible data structures

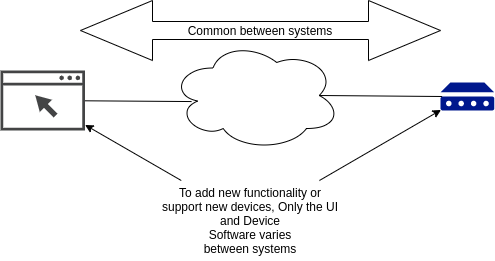

As we work on IoT systems, data structures (types) tend to emerge. Common data structures allow us to develop common algorithms and mechanism to process data. Instead of defining a new datatype for each type of sensor, define one type that will work with all sensors. Then the storage (both static and time-series), synchronization, charting, and rule logic can stay the same and adding functionality to the system typically only involves changing the edge application and the frontend UI. Everything between these two end points can stay the same. This is a very powerful and flexible model as it is trivial to support new sensors and applications.

See Data for more information.

Node Tree

The same Simple IoT application can run in both the cloud and device instances. The node tree in a device would then become a subset of the nodes in the cloud instance. Changes can be made to nodes in either the cloud or device and data is synchronized in both directions.

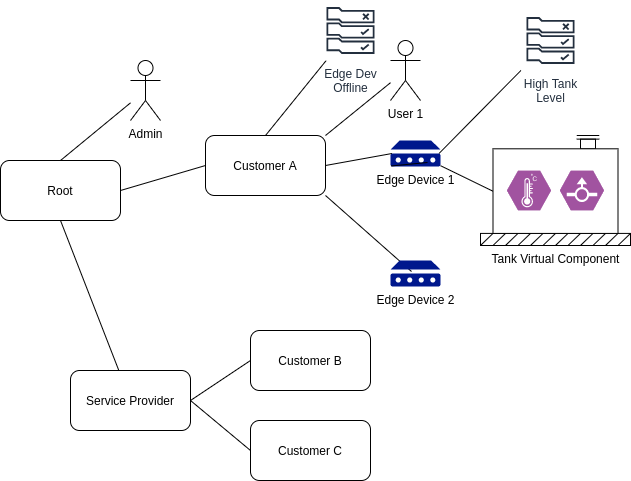

The following diagram illustrates how nodes might be arranged in a typical system.

A few notes this structure of data:

- A user has access to its child nodes, parent nodes, and parent node descendants (parents, children, siblings, nieces/nephews).

- Likewise, a rule node processes points from nodes using the same relationships described above.

- A user can be added to any node. This allows permissions to be granted at any level in the system.

- A user can be added to multiple nodes.

- A node admin user can configure nodes under it. This allows a service provider to configure the system for their own customers.

- If a point changes, it triggers rules of upstream nodes to run (perhaps paced to some reasonable interval)

- The Edge Dev Offline rule will fire if any of the Edge devices go offline. This allows us to only write this rule once to cover many devices.

- When a rule triggers a notification, the rule node and any upstream nodes can optionally notify its users.

The distributed parts of the system include the following instances:

- Cloud (could be multiple for redundancy). The cloud instances would typically store and synchronize the root node and everything under it.

- Edge Devices (typically many instances (1000’s) connected via low bandwidth cellular data). Edge instances would store and synchronize the edge node instance and descendants (ex Edge Device 1)

- Web UI (potentially dozens of instances connected via higher bandwidth browser connection).

As this is a distributed system where nodes may be created on any number of connected systems, node IDs need to be unique. A unique serial number or UUID is recommended.

Application Architecture

Contents

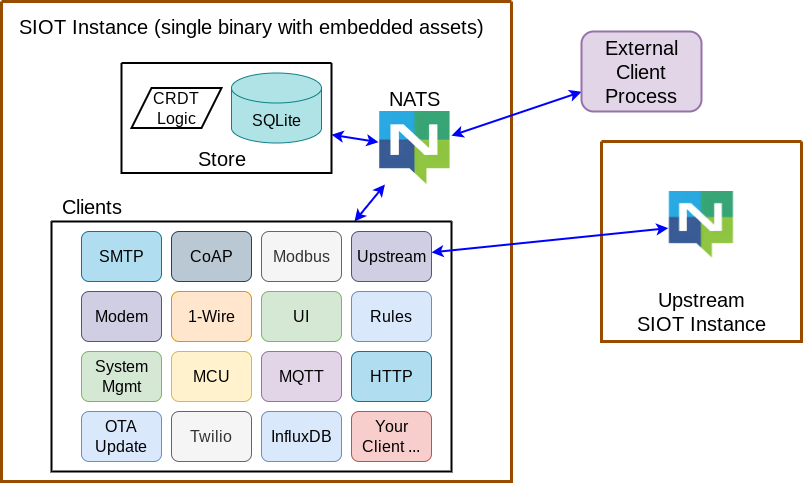

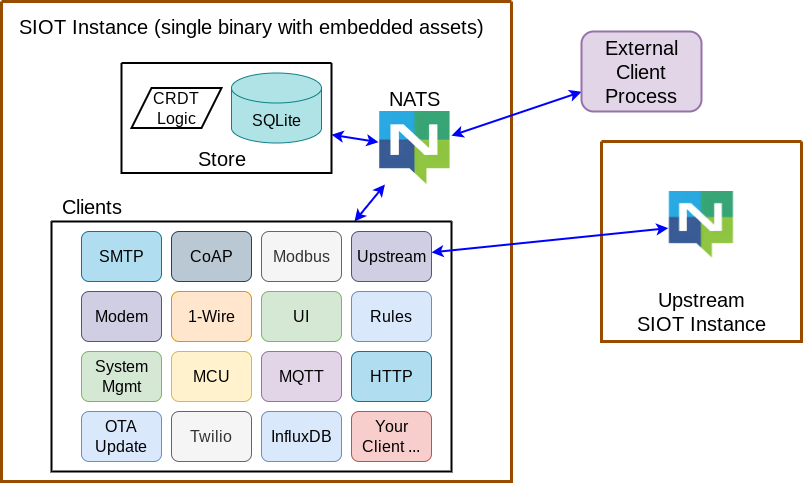

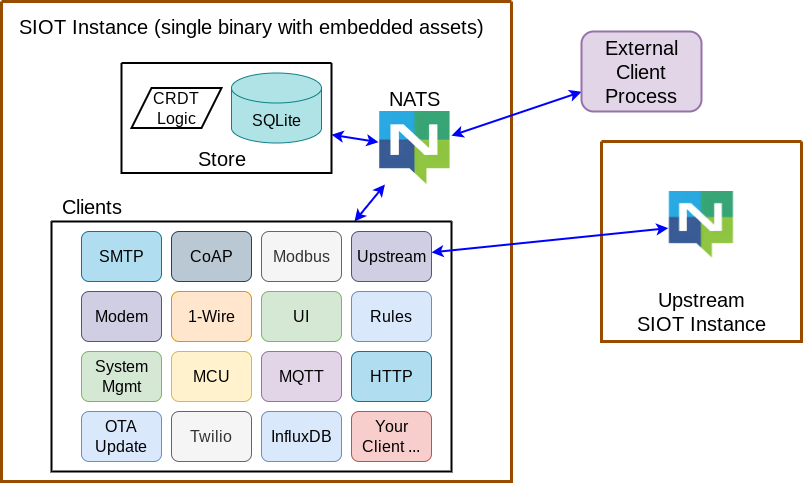

The Simple IoT Go application is a single binary with embedded assets. The database and NATS server are also embedded by default for easy deployment. There are five main parts to a Simple IoT application:

- NATS Message Bus: all data goes through this making it very easy to observe the system.

- Store: persists the data for the system, merges incoming data, maintains node hash values for synchronization, rules engine, etc. (the rules engine may eventually move to a client)

- Clients: interact with other devices/systems such as Modbus, 1-wire, etc. This is where most of the functionality in a SIOT system lives, and where you add your custom functionality. Clients can exist inside the Simple IoT application or as external processes written in any language that connect via NATS. Clients are represented by a node (and optionally child nodes) in the SIOT store. When a node is updated, its respective clients are updated with the new information. Likewise, when a client has new information, it sends that out to be stored and used by other nodes/instances as needed.

- HTTP API: provides a way for HTTP clients to interact with the system.

- Web UI: Provides a user interface for users to interact with the system. Currently it uses the HTTP API, but will eventually connect directly to NATS.

The simplicity of this architecture makes it easy to extend with new functionality by writing a new client. Following the constraints of storing data as nodes and points ensures all data is visible and readable by other clients, as well as being automatically synchronized to upstream instances.

Application Lifecycle

Simple IoT uses the

Run()/Stop()

pattern for any long running processes. With any long running process, it is

important to not only Start it, but also to be able to cleanly Stop it. This is

important for testing, but is also good practice. Nothing runs forever so we

should never operate under this illusion. The

oklog/run packaged is used to start and

shutdown these processes concurrently. Dependencies between processes should be

minimized where possible through retries. If there are hard dependencies, these

can be managed with WaitStart()/WaitStop() functions. See

server.go

for an example.

NATS lends itself very well to a decoupled application architecture because the NATS clients will buffer messages for some time until the server is available. Thus, we can start all the processes that use a NATS client without waiting for the server to be available first.

Long term, a NATS API that indicates the status of various parts (rules engine, etc.) of the system would be beneficial. If there are dependencies between processes, this can be managed inside the process instead of in the code that starts/stops the processes.

NATS Integration

The NATS API details the NATS subjects used by the system.

Echo concerns

Any time you potentially have two sources modifying the same resource (a node), you need to be concerned with echoed messages. This is a common occurrence in Simple IoT. Because another resource may modify a node, typically a client needs to subscribe to the node messages as well. This means when it sends a message, it will typically be echoed back. See the client documentation for ideas on how to handle the echo problem.

The

server.NewServer

function returns a NATS connection. This connection is used throughout the

application and does not have the NoEcho option set.

User Interface

Currently, the User Interface is implemented using a Single Page Architecture (SPA) Web Application. This keeps the backend and frontend implementations mostly independent. See User Interface and Frontend for more information.

There are many web architectures to chose from and web technology is advancing at a rapid pace. SPAs are not in vogue right now and more complex architectures are promoted such as Next.js, SveltKit, Deno Fresh, etc. Concerns with SPAs include large initial load and stability (if frontend code crashes, everything quits working). These concerns are valid if using JavaScript, but with Elm these concerns are minimal as Elm compiles to very small bundles, and run time exceptions are extremely rare. This allows us to use a simple web architecture with minimal coupling to the backend and minimal build complexity. And it will be a long time until we write enough Elm code that bundle size matters.

A decoupled SPA UI architecture is also very natural in Simple IoT, as IoT systems are inherently distributed. The frontend is just another client, much the same as a separate machine learning process, a downstream instance, a scripting process, etc.

Simple IoT Clients

Contents

Most functionality in Simple IoT is implemented in Clients.

Each client can be configured by one or more nodes in the SIOT store graph. These nodes may be created by a user, a process that detects new plug and play hardware, or other clients.

A client interacts with the system by listening for new points it is interested in and sending out points as it acquires new data.

Creating new clients

See Development for information on how to set up a development system.

Simple IoT provides utilities that assist in creating new clients. See the Go package documentation for more information. A client manager is created for each client type. This manager instantiates new client instances when new nodes are detected and then sends point updates to the client. Two levels of nodes are currently supported for client configuration. An example of this would be a Rule node that has Condition and Action child nodes.

A “disabled” option is useful and should be considered for every new client.

Creating a new client typically requires the following steps:

- Add any new node and points types to

schema.go,Node.elm, andPoint.elm. Please try to reuse existing point types when possible. - Create a new client in

client/directory. A client is defined by a type that satisfies theClient interface. A constructor must also be defined that is passed toNewManagerand a struct that represents the client data. The name of the struct must match the node type - for instance a node of typecanBusneeds to be defined by a struct namedCanBus. Additionally, each field of the client struct must have point tags. This allows us to automatically create and modify client structs from arrays of node points. - Create a new manager for the client in

client/client.go - Create an Elm UI for the client in

frontend/src/Components/ - Create plumbing for new

NodeXYZinfrontend/src/Pages/Home_.elm. Note, this can likely be improved a lot.

It is easiest to copy one of the existing clients to start. The NTP client is relatively simple and may be a good example.

Client life-cycle

It is important the clients cleanly implement the Run()/Stop() pattern and shut down cleanly when Stop() is called releasing all resources. If nodes are added or removed, clients are started/stopped. Additionally, if a child node of a client config is added or removed, the entire client is stopped and then restarted. This relieves the burden on the client from managing the addition/removal of client functionality. Thus, it is very important that clients stop cleanly and release resources in case they are restarted.

Message echo

Clients need to be aware of the “echo” problem as they typically subscribe as

well as publish to the points subject for the nodes they manage. When they

publish to these subjects, these messages will be echoed back to them. There are

several solutions:

- Create a new NATS connection for the client with the

NoEchooption set. For this to work, each client will need to establish its own connection to the server. This may not work in cases where subjects are aliased into authenticated subject namespaces. - Inspect the

PointOriginfield - if is blank, then it was generated by the node that owns the point and does not need to be processed by the client generating the data for that node. If is not blank, then the Point was generated by a user, rule, or something other than the client owning the node and must be processed. This may not always work - example: user is connected to a downstream instance and modifies a point that then propagates upstream- it may get echoed back to an authenticated client.

- (investigation stage) A NATS messages header can be populated with the ID of the client that sent the message. If it is an authenticated client, then the message will not be echoed on the authenticated client subject namespace of the same ID. This information is not stored, so cannot be used for auditing purposes.

The SIOT client manager filters out points for the following two scenarios:

- A point with the same ID as the client and Origin set to a blank string.

- A point received for a client where Origin matches the client root node ID.

Thus, if you want to set a point in one client and get that point to another node client, you must set the Origin field. This helps ensure that the Origin field is used consistently as otherwise stuff won’t work.

This gets a little tricky for clients that manage a node and its children (for instance the rule client - it has condition and action child nodes). If we follow the following rule:

Clients must set the point Origin field for any point sent to anything other than its root node.

If we following the above rule, then things should work. We may eventually provide clients with a function to send points that handles this automatically, but for now it is manual.

See also tracking who made changes.

Development

Go Package Documentation

The Simple IoT source code is available on GitHub.

Simple IoT is written in Go. Go package documentation is available.

Building Simple IoT

Requirements:

- Go

- Node/NPM

Simple IoT build has currently been testing on Linux and MacOS systems. See

envsetup.sh

for scripts used in building.

To build:

source envsetup.shsiot_setupsiot_build

Developing Simple IoT

npm install -g run-pty. envsetup.shsiot_setupsiot_watch

The siot_watch command can be used when developing Simple IoT. This does the

following:

- Starts

elm-watchon the Elm code.elm-watchwill automatically update the UI without losing state any time an Elm file changes. - Runs the Go backend and rebuilds it anytime a Go module changes (only tested on Linux and MacOS, but should be easy to set up Windows as well)

Both of the above are run in a run-pty

wrapper, which allows you to see the output of either process. The output of the

Elm compile is displayed in the browser, so it is rarely necessary to view the

elm-watch side.

Using Simple IoT as a library

Simple IoT can be used a library for your custom application. The SIOT main.go illustrates how to start the SIOT server, and add clients. You can do this from any Go application. With a few lines of code, this gives you a lot of functionality including a NATS server.

Developing a new SIOT client

Most SIOT functionality is implemented in clients. See the client documentation for more information.

Customizing the UI

Currently, there is no simple way to customize the SIOT UI when using SIOT as a library package. Forking and changing the SIOT Elm code is probably the simplest way if you want to make a small change now.

In the future, we plan to provide an API for passing in a custom UI to the SIOT Server. You can also implement a custom HTTP client that serves up a custom UI.

Code Organization

Currently, there are a lot of subdirectories. One reason for this is to limit

the size of application binaries when building edge/embedded Linux binaries. In

some use cases, we want to deploy app updates over cellular networks, therefore

we want to keep packages as small as possible. For instance, if we put the

natsserver stuff in the nats package, then app binaries grow a couple MB,

even if you don’t start a NATS server. It is not clear yet what Go does for dead

code elimination, but at this point, it seems referencing a package increases

the binary size, even if you don’t use anything in it. (Clarification welcome!)

For edge applications on Embedded Linux, we’d eventually like to get rid of net/HTTP, since we can do all network communications over NATS. We’re not there yet, but be careful about pulling in dependencies that require net/HTTP into the NATS package, and other low level packages intended for use on devices.

Directories

See Go docs directory descriptions

Coding Standards

Please run siot_test from envsetup.sh before submitting pull requests. All

code should be formatted and linted before committing.

Please configure your editor to run code formatters:

- Go:

goimports - Elm:

elm-format - Markdown:

prettier(note, there is a.prettierrcin this project that configures prettier to wrap markdown to 80 characters. Whether to wrap markdown or not is debatable, as wrapping can make diffs harder to read, but Markdown is much more pleasant to read in an editor if it is wrapped. Since more people will be reading documentation than reviewing, let’s optimize for the reading in all scenarios - editor, GitHub, and generated docs)

Pure Go

We plan to keep the main Simple IoT application a pure Go binary if possible. Statically linked pure Go has huge advantages:

- You can easily cross compile to any target from any build machine.

- Blazing fast compile times